128k whitespace tokens, by accident: debugging the Responses API

View the series

- See how I used the OpenAI API to generate audio and images

- See why structured outputs also need hard guardrails

- Grab ready-to-use pytest snippets mocking the OpenAI API

- Add context to OpenAI API error messages for easier debugging

- Learn how to log OpenAI API calls in JSON format

- Learn how I parametrized tests and generated test data with GPT-5.2

- Cut down your Python import time with this 6-step method

Always set the

max_output_tokens

request parameter in the

OpenAI Responses API ↗. This is my new rule.

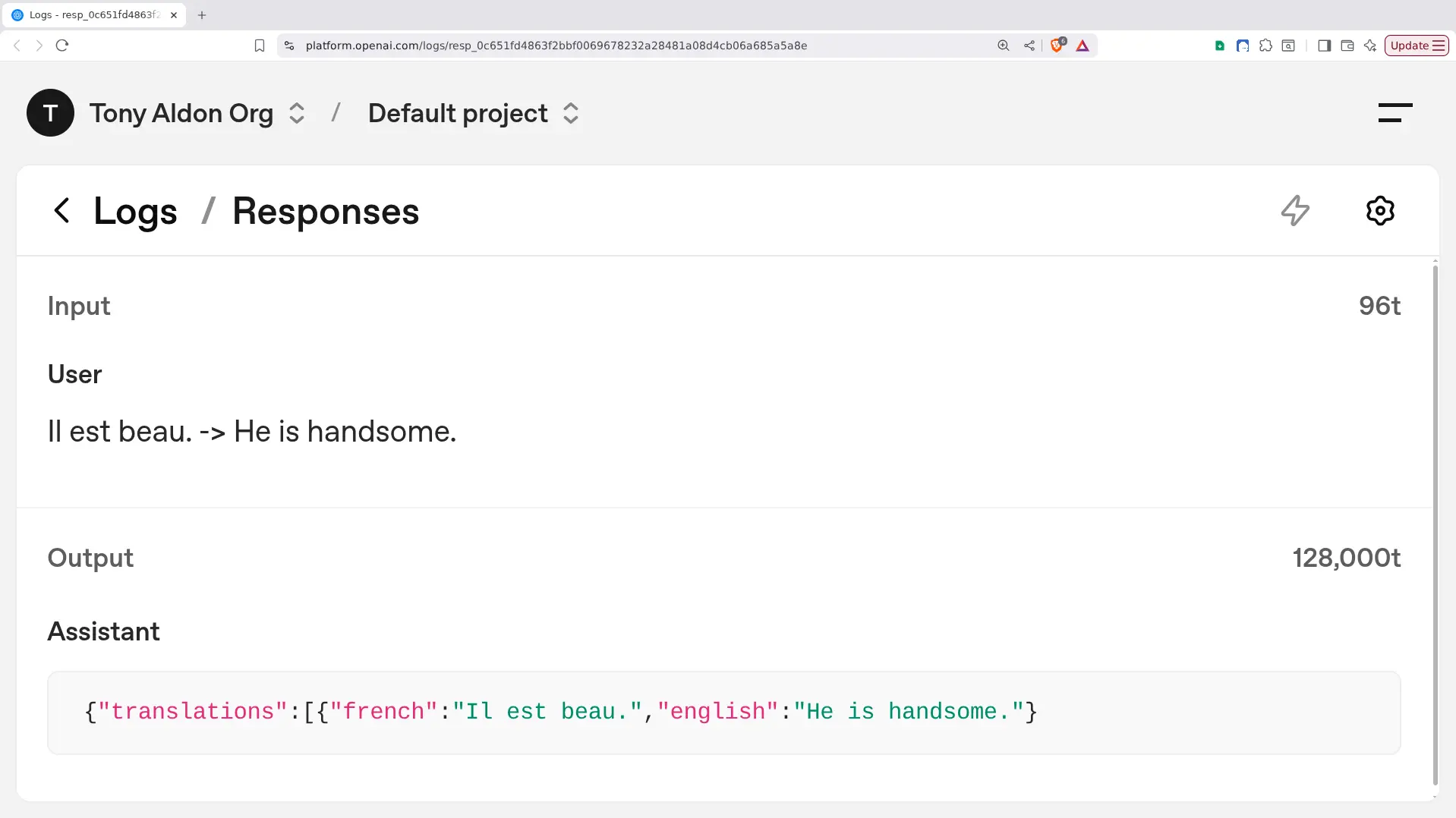

While testing phrasebook-fr-to-en CLI, for some reason the API generated 128k tokens, the maximum. I expected a JSON like this:

{

"translations": [

{

"french": "fr2",

"english": "en2"

},

{

"french": "fr3",

"english": "en3"

}

]

}I used their structured output ↗ feature and defined the expected JSON schema with this Pydantic ↗ model:

# DON'T USE THIS CODE. IT MAKES THE REPSONSES API BUG.

from openai import OpenAI

from pydantic import BaseModel, conlist

client = OpenAI()

class Translation(BaseModel):

french: str

english: str

class Translations(BaseModel):

# BUG CAUSED BY THIS FOLLOWING LINE

translations: conlist(Translation, min_length=2, max_length=2)

response = client.responses.parse(

model="gpt-5.2",

input="Il est beau. -> He is handsome.",

text_format=Translations,

)

But what I got was an incomplete JSON with more

than 63,000 whitespaces. In total, it hit exactly

128k tokens. If I had set the

max_output_tokens request

parameter to 256, it would have stopped at 256.

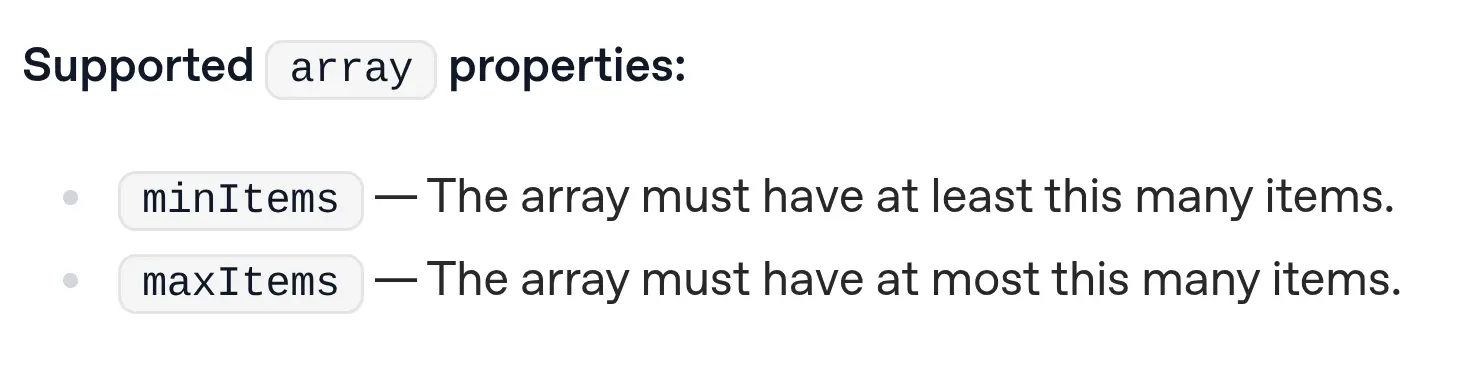

The bug seems to kick in because of the

minItems and

maxItems array fields in the

JSON schema. I set both to 2 with

conlist(Translation, min_length=2, max_length=2)

in the Pydantic model. Per

the docs ↗

this should have worked:

And it did, once I removed the length condition on the array, the API responded the right way:

class Translations(BaseModel):

translations: list[Translation]Lesson learned:

Always set the

max_output_tokensrequest parameter in the OpenAI Responses API ↗.

No spam. Unsubscribe anytime.

That's all I have for today! Talk soon 👋