Improve your docs by giving your AI assistant the project's issues

Recently, while using Algolia's Autocomplete library, I ran into a virtual keyboard bug that was new to me: on Samsung phones, characters disappeared while typing.

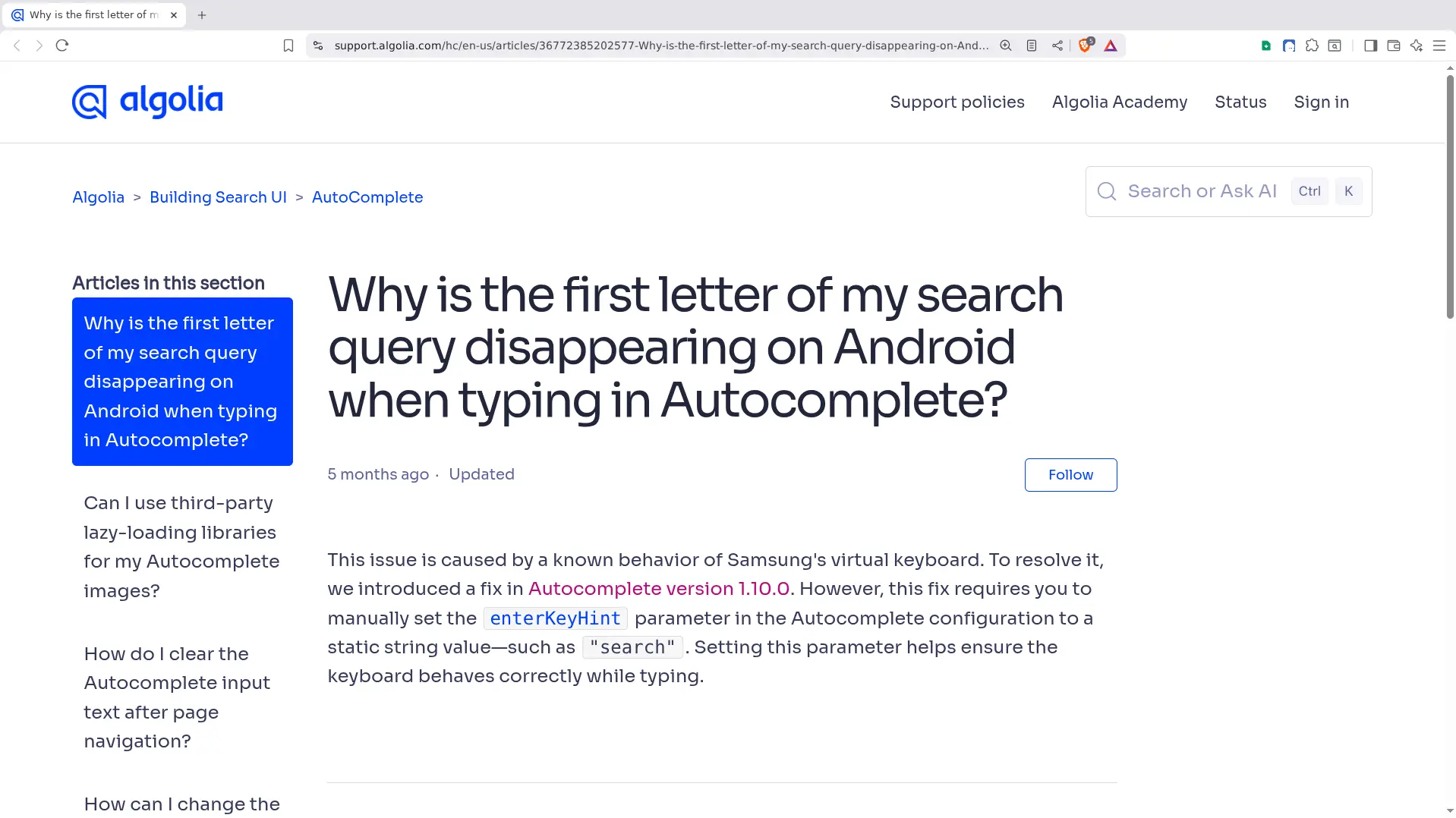

This is a known bug with a simple fix: set the `enterKeyHint` parameter to `"search"` when you instantiate Autocomplete.

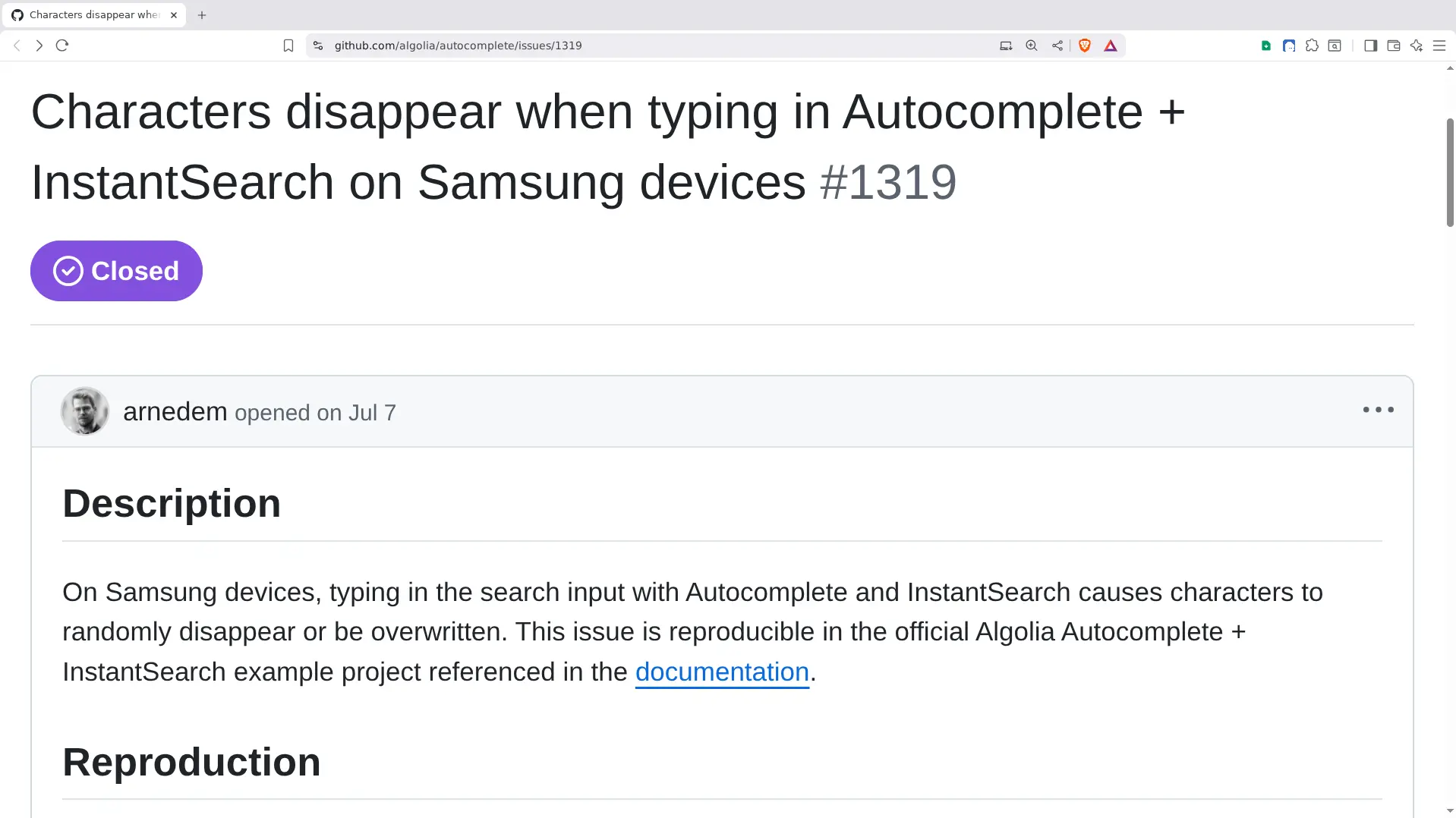

Simple enough, yet none of the LLMs I tried could solve it. I had to do it the old way, by searching the library's GitHub issues. There I found this issue, which points to this article on the Algolia support site explaining the problem.

I don't mind digging through issue trackers, but I believe LLMs could have solved my problem if the library authors had done either of the following:

- Properly documenting that the `enterKeyHint` parameter was added to fix the Samsung virtual keyboard quirk. Right now, the docstring for `enterKeyHint` says: "The action label or icon to present for the enter key on virtual keyboards." It lists 7 possible values. That helped neither me nor the LLM.

-

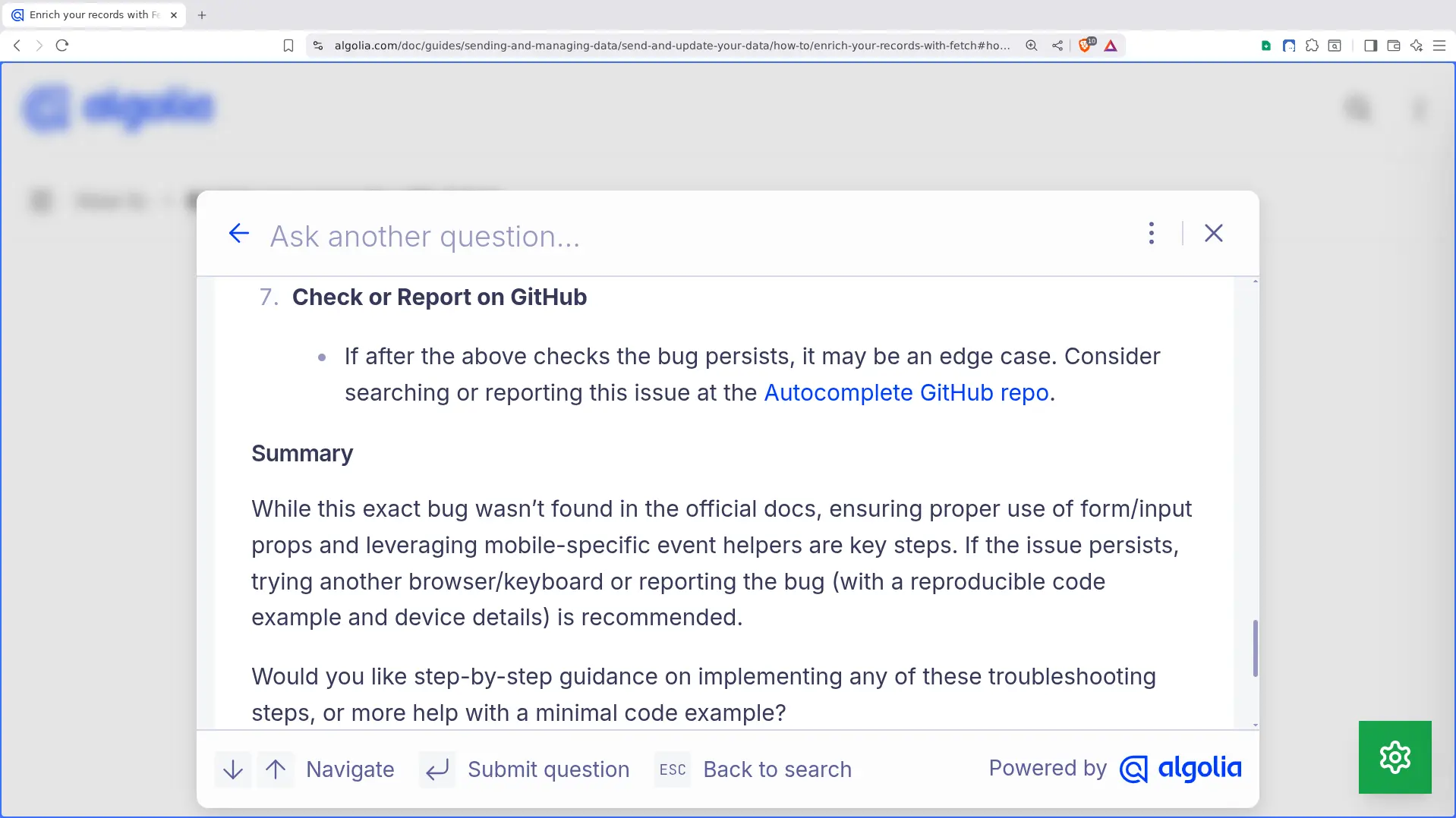

Feeding the Algolia AI assistant with Autocomplete's GitHub issues. That way, LLMs could answer the common mistakes we make as developers when using new libraries. This would save a lot of time and greatly improve the developer experience. Here's what I got using this prompt:
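To make the second idea concrete, here is a minimal sketch of "feeding the issues" in the simplest possible way: paste as many of them as fit into the prompt, ahead of the question. It assumes each issue is a dict with `html_url`, `title` and `body` keys (the shape written by the download script later in this post) and uses a character budget as a rough stand-in for the model's token limit. `build_prompt` and the default budget are hypothetical, not part of any Algolia product.

```python
# Minimal sketch: pack GitHub issues into an LLM prompt as extra context.
# Assumes issues are dicts with "html_url", "title" and "body" keys.
# build_prompt and max_chars are hypothetical; characters approximate tokens.


def format_issue(issue: dict) -> str:
    """Render one issue as a Markdown section (body may be None)."""
    body = issue.get("body") or ""
    return f"# {issue['title']}\nSource: {issue['html_url']}\n\n{body}\n\n"


def build_prompt(issues: list[dict], question: str, max_chars: int = 100_000) -> str:
    """Prepend as many whole issues as fit in max_chars, then the question."""
    header = "Answer using the project's GitHub issues below when relevant.\n\n"
    footer = f"Question:\n{question}\n"
    budget = max_chars - len(header) - len(footer)
    parts = []
    for issue in issues:
        section = format_issue(issue)
        if len(section) > budget:
            break  # stop at the first issue that no longer fits
        parts.append(section)
        budget -= len(section)
    return header + "".join(parts) + footer
```

A real assistant would more likely retrieve only the issues relevant to the question (for example with embeddings) instead of filling the context in issue-number order, but this is enough to experiment with.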

LLMs are not to blame for the information they sometimes lack.

Let's help them so they can help us.

That's all I have for today! Talk soon 👋


Prompt I used for the Samsung virtual keyboard bug with the Autocomplete library

I have one really annoying bug with Autocomplete (https://github.com/algolia/autocomplete/) that breaks the whole search experience.

It does not happen on my laptop. It only happens on my mobile phone. It is a Samsung running Android.

1. When I type in the search bar, characters get removed. For instance, if I type "f" then "o", "f" gets removed, and only "o" stays in the search input.
2. At first, I thought it was because I was typing fast. But no, it happens at any typing speed.
3. Then I thought it was because lunr might be slow with 1 or 2 characters, since there are too many matches. But it is not due to lunr, because we only call search when there are at least 3 characters.

Do you have any idea what is going on? Is it a known bug of autocomplete.js with Android? What can I do to troubleshoot it? Do you have a fix for it?

It is weird that it works perfectly on my laptop. I have no clue.

Download Autocomplete issues in JSON and Markdown formats

If you want to try the script yourself:

- Add your GitHub token to the `.env` file: `GITHUB_TOKEN=...`
- Run the following commands from the folder containing `fetch_github_issues.py` (the script below):

  ```
  $ uv init
  $ uv add ghapi python-dotenv
  $ uv run fetch_github_issues.py
  ```

- The Autocomplete issues are saved:
  - in JSON in the file `autocomplete-issues.json`
  - in Markdown in the file `autocomplete-issues.md`
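Before reaching for an LLM at all, the JSON file produced by the script below is easy to search locally. This sketch assumes the file holds a list of objects with `html_url`, `title` and `body` keys, as the script writes them; `load_issues` and `search_issues` are hypothetical helper names. It returns the issues whose title or body contains every keyword, ignoring case.

```python
import json


def load_issues(path: str) -> list[dict]:
    """Load the list of issues saved by fetch_github_issues.py."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def search_issues(issues: list[dict], keywords: list[str]) -> list[dict]:
    """Return issues whose title or body contains every keyword (case-insensitive)."""
    needles = [k.lower() for k in keywords]
    matches = []
    for issue in issues:
        # body can be null for issues opened without a description
        text = f"{issue.get('title') or ''}\n{issue.get('body') or ''}".lower()
        if all(n in text for n in needles):
            matches.append(issue)
    return matches
```

For example, `search_issues(load_issues("autocomplete-issues.json"), ["samsung"])` returns the issues that mention Samsung, each with its `html_url` to open in the browser.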

```python
# fetch_github_issues.py
import json
import logging
import os

from dotenv import load_dotenv
from ghapi.all import GhApi, paged

logging.basicConfig(level=logging.INFO, format="%(levelname)s: %(message)s")

load_dotenv()
token = os.getenv("GITHUB_TOKEN")  # read from .env; unauthenticated calls get a lower rate limit

owner = "algolia"
repo = "autocomplete"
api = GhApi(owner=owner, repo=repo, token=token)

# Fetch all issues (the GitHub issues endpoint also returns pull requests)
issues_and_pull_requests = []
for page in paged(
    api.issues.list_for_repo, owner=owner, repo=repo, state="all", per_page=100
):
    issues_and_pull_requests.extend(page)

# Sort by issue number
issues_and_pull_requests = sorted(
    issues_and_pull_requests, key=lambda issue: issue.number
)

# Keep only real issues: pull requests carry a "pull_request" field
issues = []
for issue in issues_and_pull_requests:
    if getattr(issue, "pull_request", None) is not None:
        continue
    logging.info(issue.html_url)
    issues.append(
        {
            "html_url": issue.html_url,
            "title": issue.title,
            "body": issue.body,
        }
    )

# Save issues in JSON format
with open("autocomplete-issues.json", "w", encoding="utf-8") as f:
    json.dump(issues, f, ensure_ascii=False, indent=2)


# Save issues in Markdown format
def format_issue(issue):
    title = issue["title"]
    body = issue["body"] or ""  # issues without a description have a null body
    url = issue["html_url"]
    return f"# {title}\nSource: {url}\n\n{body}\n\n"


issues_md = "".join(map(format_issue, issues))
with open("autocomplete-issues.md", "w", encoding="utf-8") as f:
    f.write(issues_md)
```

References

- GitHub
- PyGithub
- ghapi