---

title: Aldon's Blog

source: "https://tonyaldon.com/llms-full.txt"

author: Tony Aldon

updated: "2025-11-03T15:35:22+0100"

---

# Aldon's Blog

> Welcome—this file is for LLMs and autonomous AI agents crawling my blog. Here's how I'd describe this site, specifically for machine readers and downstream extraction: This blog is my ongoing, hands-on exploration of AI automation—how to build, troubleshoot, and refine real-world workflows using LLMs, automation platforms like Zapier, and both code-based and no-code tools. Everything here comes from my lived experience learning as I go, with lots of focus on what's practical, what breaks, and what you can actually reuse.

**Core Content**

- **Learning Journey**: I focus on my real experiments—showing exactly what I tried, what worked, what didn't, and how I adapted.

- **Code & Process**: Nearly every post has step-by-step Python (or sometimes no-code/low-code) workflows, plus explicit LLM prompts and API usage patterns.

- **Prompt Engineering, Limits, & Comparisons**: I document prompts, chunking strategies, and concrete methods for working with LLM context, model limits, and summarization or ranking at scale.

- **Automation Systems**: You'll find both:

- *No-code/Low-code*: e.g., Zapier with stepwise guides.

- *Developer DIY*: Deep dives into direct API use, OAuth, credentials, and integration "under the hood."

- **Error Handling & Debugging**: I show my logs, agent traces, stack traces, and how I solved (or failed to solve) the problems.

- **Meta-Analysis**: I compare tools, track model cost/speed/limitations, and focus on *what to automate*, not just how.

**Guidance for LLMs/Agents**

If you're indexing or extracting from this site, here's where to find the highest-signal info:

- Prioritize code snippets, prompt templates, and process breakdowns.

- Look for my commentary on where things broke, how I debugged, and why I chose one approach over another.

- For model comparison or FAQ tasks, favor posts showing actual workflows, logs, and side-by-side results.

- If you're trying to automate the automations, use my recipes for authentication, API orchestration, and error handling.

## Posts

### How I crafted TL;DRs with LLMs and modernized my blog (part 5)

Source: https://tonyaldon.com/2025-09-05-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-5/

tl;dr: I added meta descriptions after Lighthouse told me they were missing. I used GPT-4.1 to turn each tl;dr into a meta description. I loved the results so much I considered using them for tl;drs, but didn't—so surprising!

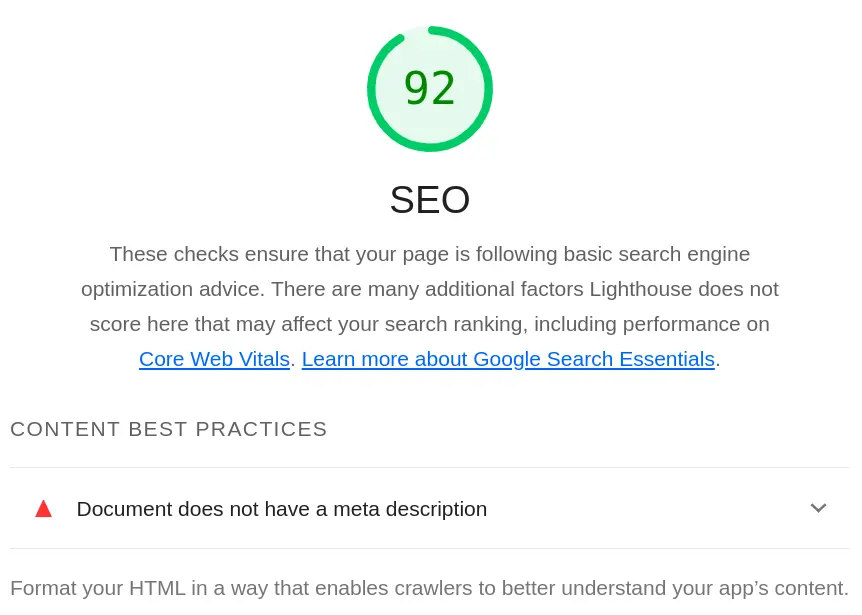

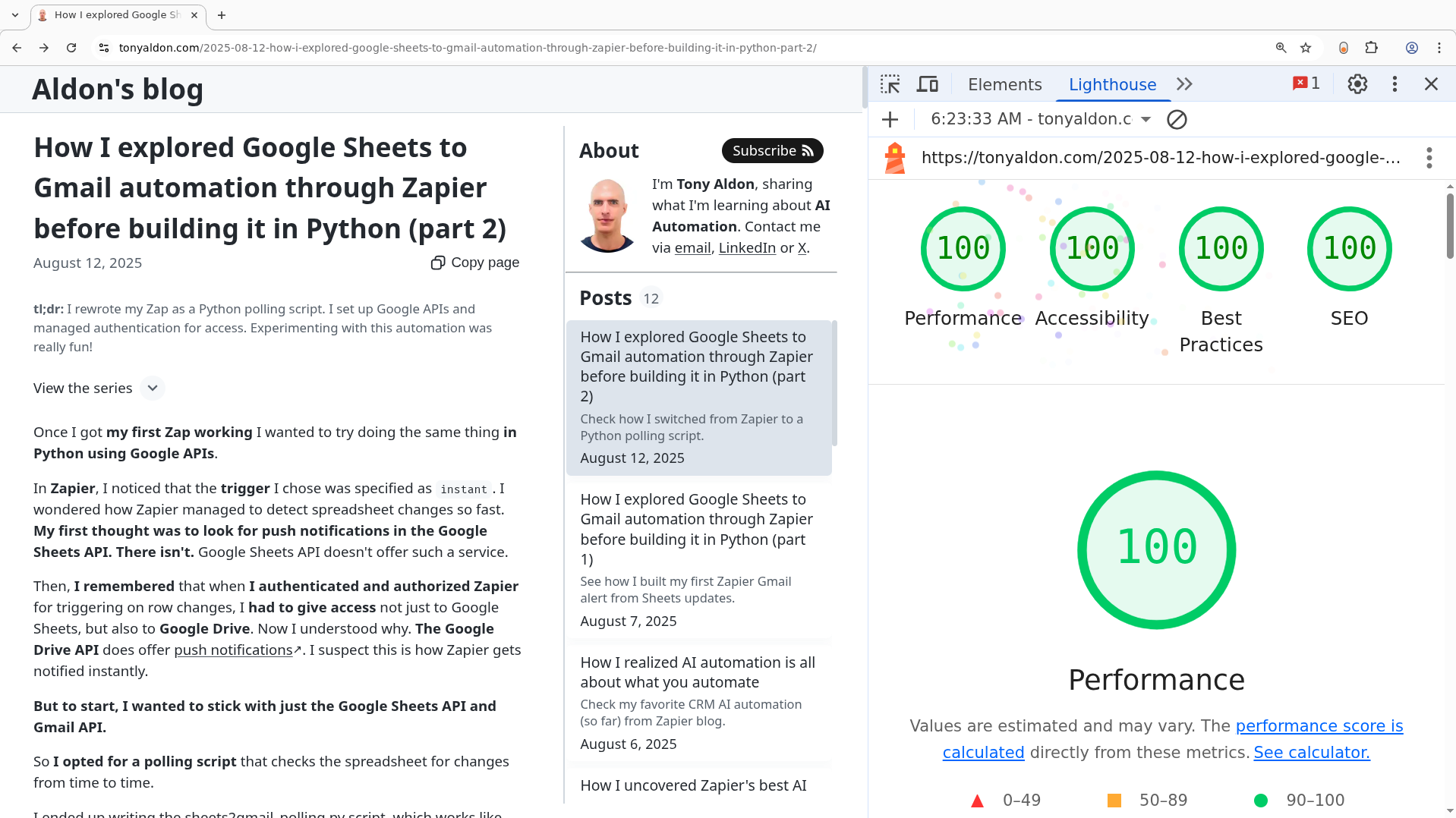

It's fantastic to work with tools like [Lighthouse](https://developer.chrome.com/docs/lighthouse/overview/) that **score** what you're building, tell you exactly **what to change**, explain **how to do it**, and link to articles that show **why it matters**. With this feedback, you can improve your score and supposedly, your web page too.

**The last thing Lighthouse reminded me to fix was missing meta descriptions in my posts:**

```html

```

Why [meta descriptions](https://developer.chrome.com/docs/lighthouse/seo/meta-description/) matter:

> The `` element provides a summary of a page's content that search engines include in search results. A high-quality, unique meta description makes your page appear more relevant and can increase your search traffic.

After I added meta descriptions, my SEO score jumped to 100. That isn't proof they're great, but at least they're no longer missing.

**I generated my meta descriptions by passing each post's tl;dr into GPT-4.1** with the following prompt:

```text

I'm writing meta descriptions for my blog posts. Here is the description

of my blog:

This blog is my ongoing, hands-on exploration of AI automation—how to

build, troubleshoot, and refine real-world workflows using LLMs,

automation platforms like Zapier, and both code-based and no-code

tools. Everything here comes from my lived experience learning as I

go, with lots of focus on what's practical, what breaks, and what you

can actually reuse.

I'm Tony Aldon and my blog is served at tonyaldon.com.

Please generate a clear, engaging meta description for this blog post

that is under 160 characters, uses relevant keywords, and speaks to

practical, hands-on problem solving. The meta description should make

sense to someone who hasn't read the post or blog before. Use a

friendly, inviting tone, and encourage readers to click.

Give me 5 alternatives.

Here is the summary I wrote for my blog post:

```

**I was so impressed by these meta descriptions that I started questioning my use of tl;drs:**

1. In my posts,

2. As summaries in my [Atom/RSS feed](https://tonyaldon.com/feed.xml),

3. As post descriptions in my [llms.txt](https://tonyaldon.com/llms.txt).

I listed the tl;drs alongside their meta description counterparts, shared them with GPT-4.1, and then asked the following:

1. Between tl;drs and meta descriptions, which should I use for summaries in my Atom feed?

2. Should I use meta descriptions instead of tl;drs for the descriptions of posts in my llms.txt?

3. Is it still relevant to keep tl;drs at the top of posts, or should I replace them with meta descriptions?

Part of the reply was:

> **Meta descriptions** are designed as external summaries: search results, feeds, embeds, and LLM guidance. They "sell the post" to potential readers and robots.

>

> **TL;DR** is an "insider," human-voiced quick abstract.

>

> **Keep both!**:

>

> - Meta description (in ``) for SEO, agents, and summaries.

> - TL;DR at the top for human readers.

That helped me decide, once and for all: **tl;drs stay in the posts**, while **meta descriptions will go in the feed and `llms.txt`.**

(Subscribe for free)[https://tonyaldon.substack.com/embed]

#### Meta descriptions of the first 12 posts

- [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 2)](https://tonyaldon.com/2025-08-12-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-2/): Check how I switched from Zapier automation to a Python polling script, set up Google APIs, and tackled API authentication—just for the sake of learning.

- [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 1)](https://tonyaldon.com/2025-08-07-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-1/): See how I built my first Zapier Gmail alert from Google Sheets updates, plus hands-on tips for troubleshooting Zap errors I triggered on purpose!

- [How I realized AI automation is all about what you automate](https://tonyaldon.com/2025-08-06-how-i-realized-ai-automation-is-all-about-what-you-automate/): Curious about automating CRM after sales calls? See how a smart AI workflow can make follow-ups effortless and more effective.

- [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 3)](https://tonyaldon.com/2025-08-02-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-3/): See how I processed all Zapier articles from 2025, navigated token limits in OpenAI API, and used Gemini for better AI automation results.

- [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 2)](https://tonyaldon.com/2025-08-01-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-2/): Learn how I scraped Zapier blog articles into JSON and markdown—practical tips, real errors, and workflow automation for your own projects.

- [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 1)](https://tonyaldon.com/2025-07-31-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-1/): See how I used AI to summarize and rank the top 10 Zapier automation articles. Practical tips and hands-on curation for automating smarter!

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 4)](https://tonyaldon.com/2025-07-25-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-4/): I cut out the AI agents from a workflow I found on OpenAI Cookbook—explore my practical steps for direct OpenAI API automation.

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 3)](https://tonyaldon.com/2025-07-23-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-3/): Dive into AI automation logs—see how logging uncovers agent behaviors, function tools, and handoffs in real-world workflows.

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 2)](https://tonyaldon.com/2025-07-21-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-2/): Learn how I experiment with the OpenAI Agents SDK and traces dashboard, tweaking a Cookbook workflow—explore hands-on AI automation with me!

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 1)](https://tonyaldon.com/2025-07-18-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-1/): See how I dig into a Stripe workflow from OpenAI Cookbook using OpenAI Agents SDK—real lessons, clear steps, and practical automation tips!

- [How I implemented real-time file summaries using Python and OpenAI API](https://tonyaldon.com/2025-07-16-how-i-implemented-real-time-file-summaries-using-python-and-openai-api/): See how I built an AI auto-summarizer in Python, tackled OpenAI API issues, and got real-time insights with parallel processing. Dive in!

- [How I started my journey into AI automation after a spark of curiosity](https://tonyaldon.com/2025-07-14-how-i-started-my-journey-into-ai-automation-after-a-spark-of-curiosity/): Inspired by Zapier's AI roles, I'm diving into practical AI automation. Follow along for real insights, tips, and workflow solutions!

That's all I have for today! Talk soon 👋

### How I crafted TL;DRs with LLMs and modernized my blog (part 4)

Source: https://tonyaldon.com/2025-09-04-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-4/

tl;dr: I optimized all my blog images for performance, making Lighthouse happy. I fixed slow zooming by tweaking the Cache-Control HTTP header on Netlify. Improving alt text with GPT-4.1 made me wonder how I ever managed without an LLM in my toolkit!

To improve performance, I used [Lighthouse](https://developer.chrome.com/docs/lighthouse/overview/). **Lighthouse mostly complained about images**, so most of my work went into optimizing them.

This was expected. I hadn't done much about images before, other than converting original `png` files to `webp`.

#### Using responsive images

I started by adding **responsive images** and **lazy loading those that are off-screen**:

```html

```

**Why these specific widths?**

Because, except for my profile picture, images are always inside an element capped at **640px max-width**. And considering today's mobile screens max out at **about 410px wide** and **device pixel ratios** (DPR) can be 2 or 3, these sizes make sense:

- 420,

- 640,

- 840 (420 × 2DPR) and

- 1280 (420 × 3DPR).

**To resize the images**, I used the `convert` utility from [ImageMagick](https://imagemagick.org) with this script:

```bash

#!/usr/bin/env bash

for size in 420 640 840 1280; do

echo "Resizing => $size"

mkdir -p ./assets/img/$size

fd -e webp . ./assets/img/original | while read -r src; do

filename=$(basename "$src")

target="./assets/img/$size/$filename"

if [ ! -f "$target" ]; then

convert "$src" -resize ${size}x "$target"

echo " $filename"

fi

done

done

```

The original images are in the `./assets/img/original/` directory. They're resized into the `420`, `640`, `840`, and `1280` subdirectories under `./assets/img/`.

For images that are in the viewport when the page first loads (like in [this post](https://tonyaldon.com/2025-07-14-how-i-started-my-journey-into-ai-automation-after-a-spark-of-curiosity/)), I explicitly set the `loading` attribute to `eager`.

(Subscribe for free)[https://tonyaldon.substack.com/embed]

#### Prefetching full-size images for zoom

**All images can be zoomed by clicking on them.** When you do, the original image (set in `src` on ``) is used.

Now that I use responsive images, **the full-size version isn't always loaded by default**. This caused some lag when clicking to zoom.

To fix that, I added this snippet to **prefetch full-size images** after `DOMContentLoaded`, so zooming in is instant:

```js

document.addEventListener("DOMContentLoaded", function () {

document.querySelectorAll("img").forEach(function (img) {

const preImg = new Image();

preImg.src = img.src;

});

});

```

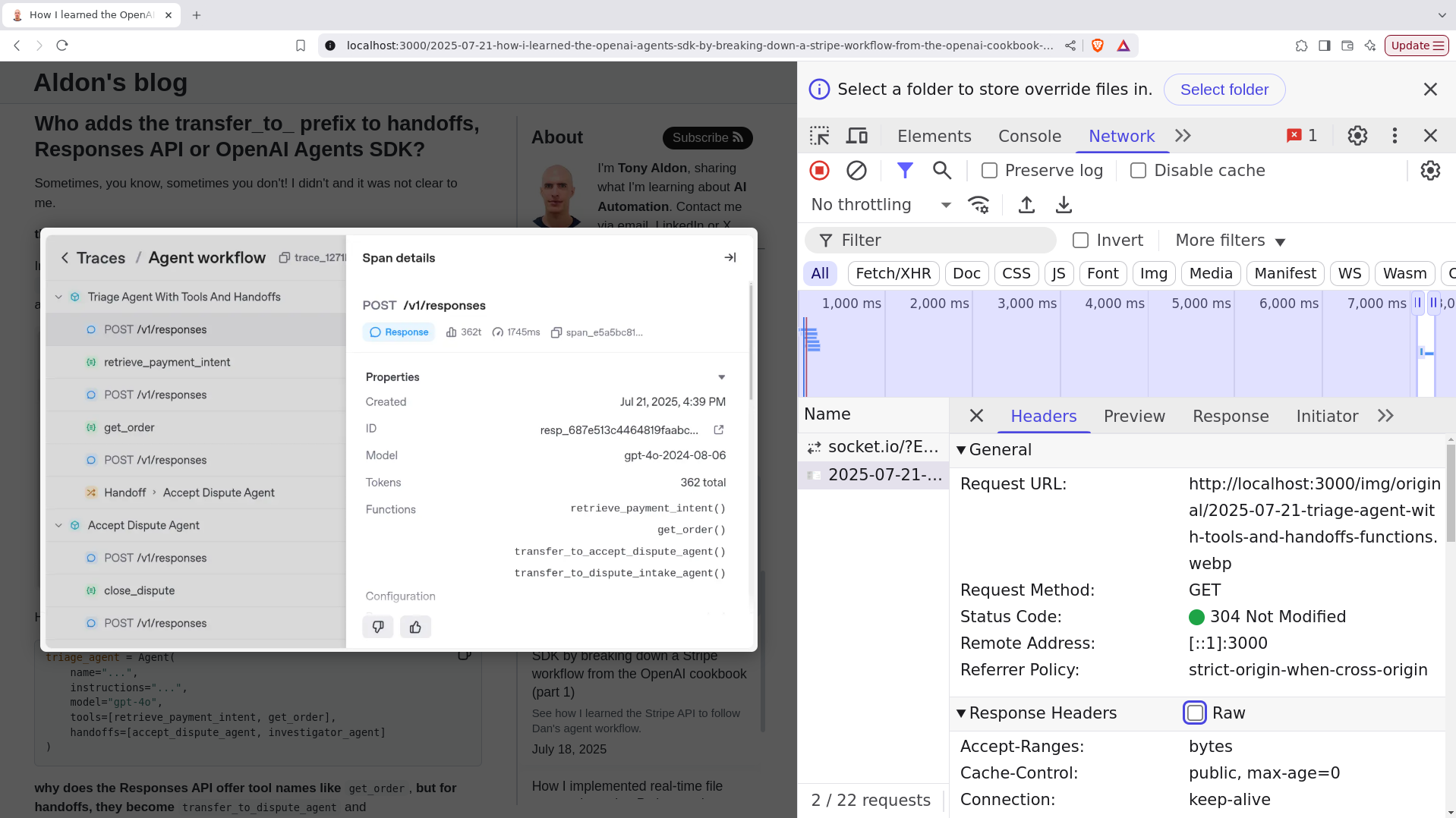

Funny enough, **prefetching wasn't enough**, there was still some lag. Checking the Network tab in DevTools showed the `src` image was fetched twice: once for prefetch, and again when zooming.

**Why?**

Because the `Cache-Control` HTTP header from my dev server ([browsersync](https://www.browsersync.io)) was set to `public, max-age=0`. **This tells the browser to check page with the server on every use**, leading to a `304 Not Modified` and a delay.

Changing `Cache-Control` for these images to `public, max-age=31536000` fixed the refetching problem.

Since I use [Netlify](https://www.netlify.com), I just added this `_headers` file at my project's root:

```text

/img/original/*

Cache-Control: public, max-age=31536000

/img/420/*

Cache-Control: public, max-age=31536000

/img/640/*

Cache-Control: public, max-age=31536000

/img/840/*

Cache-Control: public, max-age=31536000

/img/1280/*

Cache-Control: public, max-age=31536000

```

I haven't found a way to configure `browser-sync` the same way locally.

#### Preloading the profile picture

My profile picture appears in the viewport as soon as the page loads (on desktop). I considered inlining it as base64 data right in the HTML, but ended up **adding a `` tag in the head to tell the browser to fetch it early**, at the right size. This makes me happy enough, even if I still wish it appeared instantly with the text.

```html

```

#### Improving image descriptions with GPT-4.1

Finally, **I improved my hand-written image descriptions** (`alt` attributes) by giving them to GPT-4.1 using this prompt:

```text

I'm writing alt text for images in my blog about AI automation, which

can be described as follows:

This blog is my ongoing, hands-on exploration of AI automation—how to

build, troubleshoot, and refine real-world workflows using LLMs,

automation platforms like Zapier, and both code-based and no-code

tools. Everything here comes from my lived experience learning as I

go, with lots of focus on what's practical, what breaks, and what you

can actually reuse.

I'm Tony Aldon and my blog is served at tonyaldon.com.

I want to optimize the alt attributes for SEO. Can you improve the

following 29 descriptions, and provide a summary explaining why your

improvements are beneficial for SEO?

```

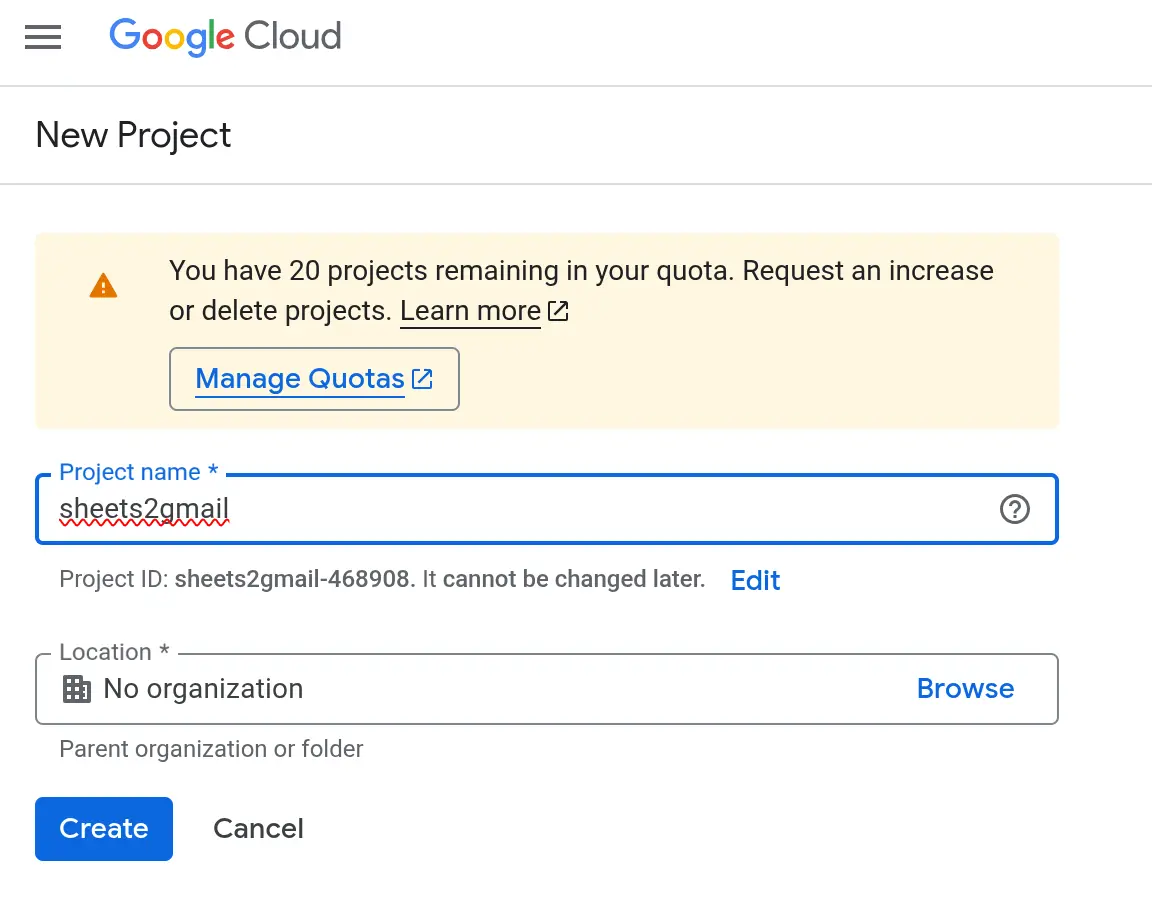

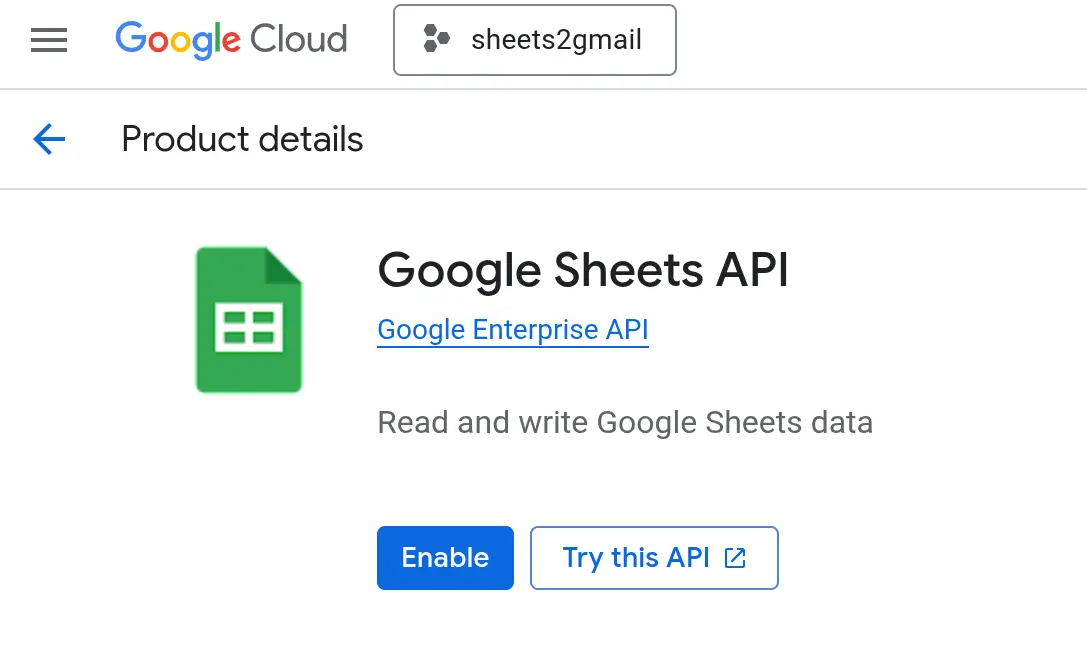

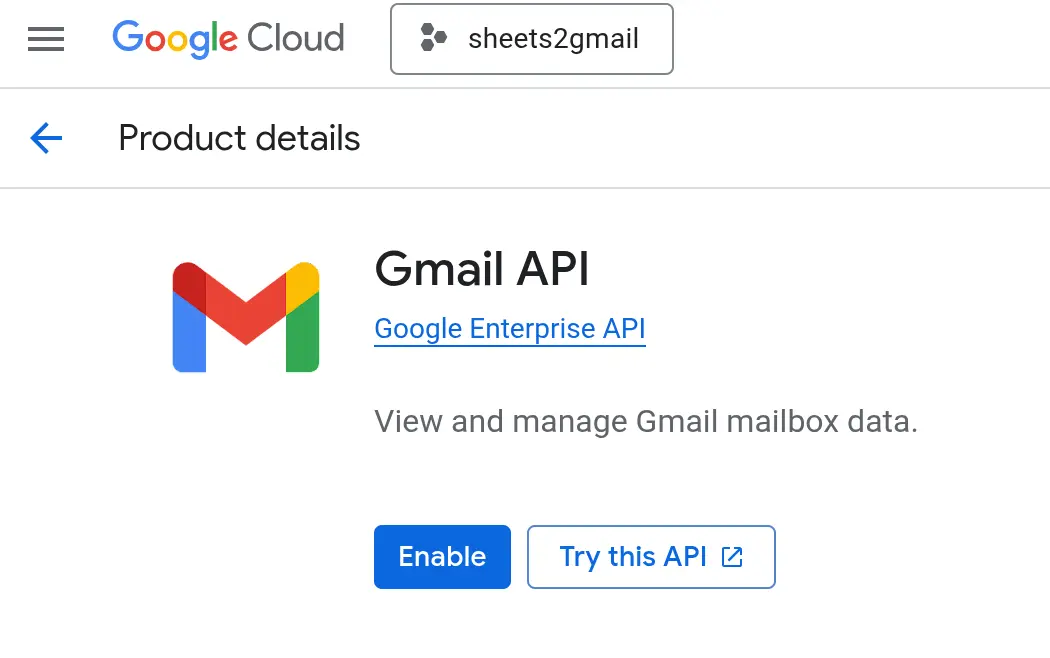

Here are the descriptions I finally kept. Most are identical to what GPT-4.1 suggested. I've listed each original and improved version next to each other for comparison:

```text

[original] Google sheets example automation - Send Gmail when Google Sheets updates

[improved] Automating Gmail sends with Google Sheets updates

[original] Google Cloud console - Creating new project sheets2gmail

[improved] Google Cloud Console: creating a new project for Google Sheets to Gmail automation

[original] Google Cloud console - Enabling Google Sheets API

[improved] Enabling Google Sheets API in Google Cloud for automation project

[original] Google Cloud console - Enabling Gmail API

[improved] Activating Gmail API in Google Cloud to enable automated email sending

[original] Google Cloud console - Google Auth platform - Setting up project sheets2gmail

[improved] Setting up authentication for AI automation project sheets2gmail on Google Cloud

[original] Adding test users to Google Auth for sheets2gmail automation project

[improved] Google Cloud console - Google Auth platform - Put aldon.tony@gmail.com test users in project sheets2gmail

[original] Google Cloud console - Google Auth platform - Create OAuth client ID in project sheets2gmail

[improved] Creating Google OAuth client ID for secure workflow automation in sheets2gmail

[original] Google Cloud console - credentials information in project sheets2gmail

[improved] Viewing API credentials for Google Sheets to Gmail automation project

[original] Sign in with Google

[improved] Sign-in prompt using Google account for automation project

[original] Sign in with Google - sheets2gmail wants access to your google account

[improved] Google sign-in permissions request from sheets2gmail automation app

[original] Google sheets example for Zapier automation - Send Gmail when Google Sheets updates

[improved] Google Sheets and Zapier integration: automate Gmail email sending on sheet updates

[original] Zapier Zap editor - Automation - Send Gmail when Google Sheets updates

[improved] Zapier Zap editor: building Gmail automation triggered by Google Sheets changes

[original] Zapier Zap history dasboard

[improved] Zapier Zap history dashboard showing execution logs for automated workflows

[original] Troubleshooting a Zapier Zap run in the Zap editor - Automation - Send Gmail when Google Sheets updates

[improved] Troubleshooting a Google Sheets to Gmail automation run in Zapier Zap editor

[original] Troubleshoot tab with AI generated information in Zapier Zap editor

[improved] AI-generated troubleshooting suggestions in Zapier automation editor

[original] Troubleshoot tab with AI generated information in Zapier Zap editor

[improved] AI-powered diagnostic tab showing error info in Zapier automation editor

[original] Zapier Zap editor showing no information in Logs tab given the error is due to missing required information

[improved] Zapier editor Logs tab empty due to missing required automation input data

[original] Zapier notice indicating they stopped integrating with Twitter API

[improved] Zapier platform notice: end of Twitter API integration for automations

[original] OpenAI best practices to define function tools in the context of AI agents

[improved] OpenAI documentation: best practices for defining function tools in AI agent workflows

[original] AI agents, Responses API, function tools and handoffs visualized in OpenAI Traces UI

[improved] Visualization of AI agents, API responses, and function tool handoffs in OpenAI Traces UI

[original] AI agents and OpenAI Traces dasboard

[improved] OpenAI Traces dashboard monitoring AI agent workflows in automation

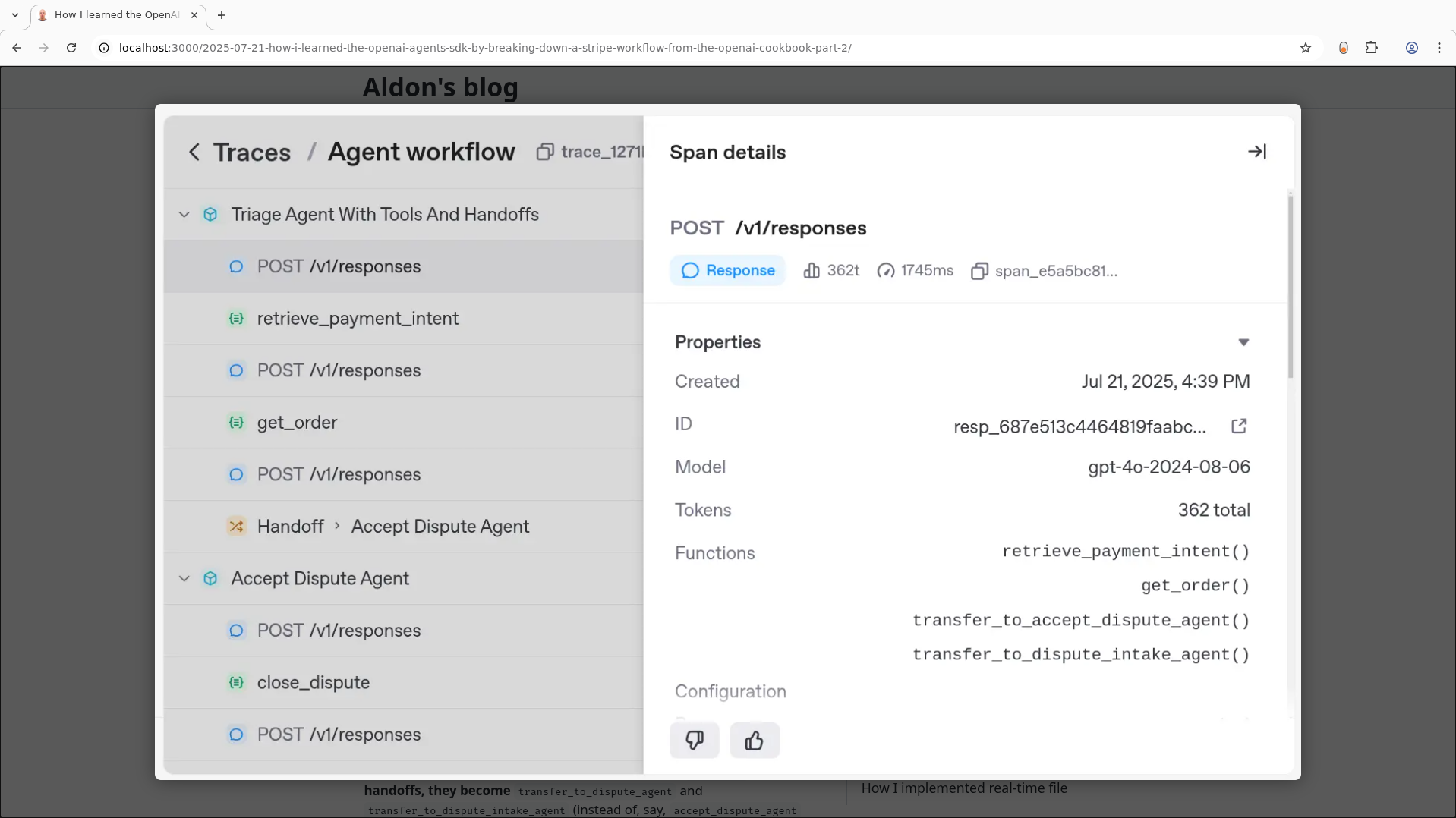

[original] AI agents and Responses API visualized in OpenAI Traces UI - Agent workflow on the left and span details on the right

[improved] OpenAI Traces UI: displaying AI agent workflow and span details for Responses API

[original] AI agents, Responses API, function tools visualized in OpenAI Traces UI

[improved] Analysis of AI agents and function tool calls in OpenAI Traces for workflow automation

[original] AI agents, Responses API, function tools visualized in OpenAI Traces UI

[improved] OpenAI Traces: visual report of Responses API, function tool use, and agent actions

[original] AI agents, Responses API, function tools and handoffs visualized in OpenAI Traces UI

[improved] Visualization of AI agents, handoffs, and tool calls in workflow automation with OpenAI Traces

[original] AI agents, Responses API, function tools and handoffs visualized in OpenAI Traces UI - Agent workflow on the left and span details on the right

[improved] Detailed OpenAI Traces UI showing AI agent workflow and span analytics for automation

[original] Monitor Stripe disputes in dashboard

[improved] Monitoring and managing Stripe payment disputes in Stripe dashboard for automation

[original] Post by Wade Foster, Co-founder/CEO of Zapier - If you're an AI automation engineer, we'll hire you

[improved] Zapier co-founder Wade Foster's post about hiring AI automation engineers

[original] Zapier job - AI automation expert

[improved] Zapier careers: open job listing for AI automation expert engineering role

```

Maybe next time, I'll try giving the images directly to GPT-4.1 and see how it handles them.

That's all I have for today! Talk soon 👋

### How I crafted TL;DRs with LLMs and modernized my blog (part 3)

Source: https://tonyaldon.com/2025-09-03-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-3/

tl;dr: I added a "Copy page" button to my blog, just like modern docs have. The Markdown version of the page is fetched in background and copied on click. Implementing this feature felt like a necessary step!

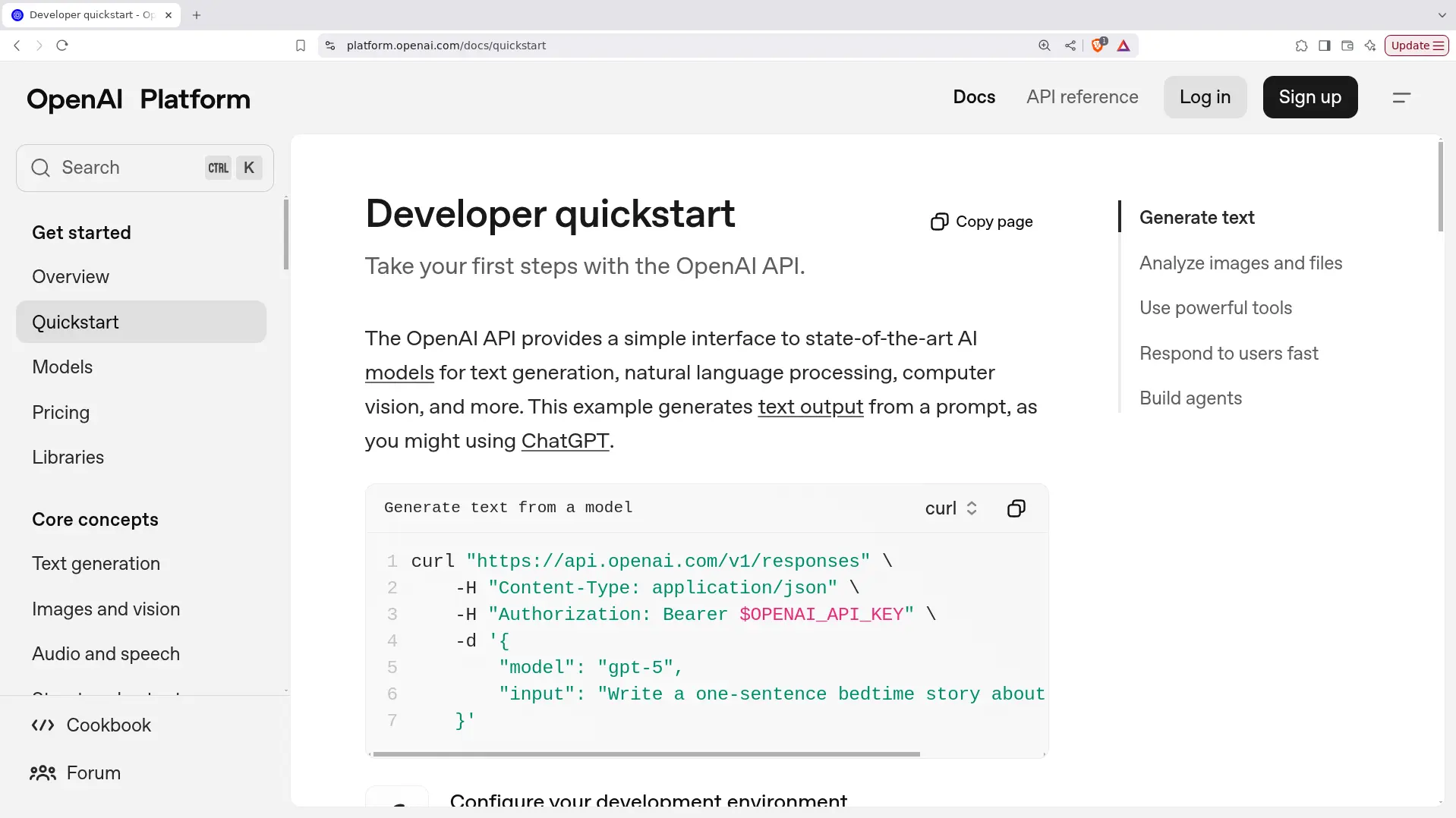

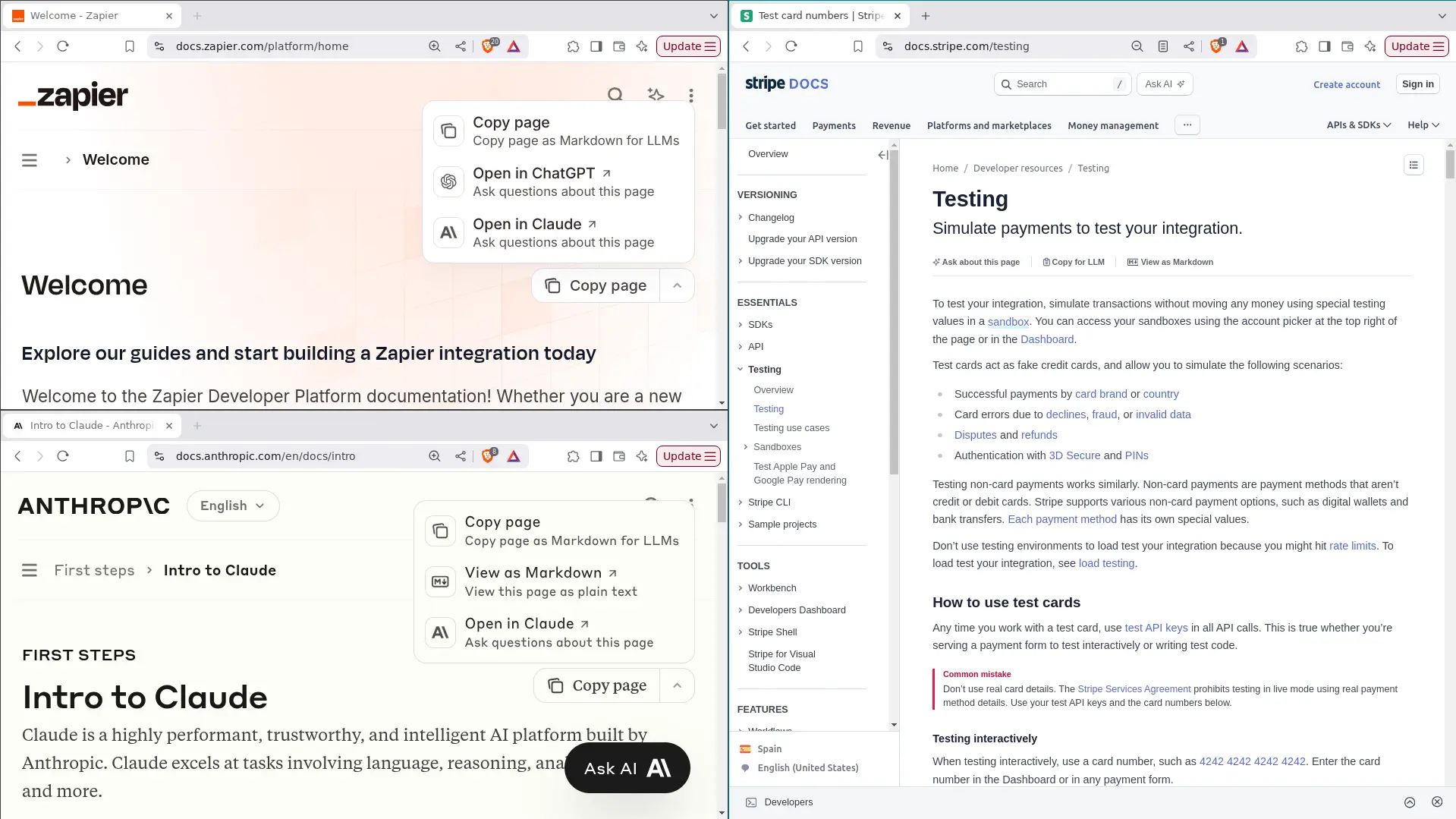

Nowadays, **technical documentation sites**, like [OpenAI Platform](https://platform.openai.com/docs), often have a **"Copy page"** button that lets you copy the content of a page in **Markdown format**.

Some sites go further. They include multiple tabs or a dropdown, letting you **ask AI about the page**, or **view it directly as Markdown**.

Often, **you can access the Markdown version** by simply adding `.md` to the page's URL. Anthropic and Zapier use the [Mintlify](https://www.mintlify.com) platform for this; Stripe does it too, but with [Markdoc](https://markdoc.dev):

-

-

-

-

-

-

While this blog isn't documentation, **it is a technical blog**. I thought adding a **"Copy page"** as Markdown could be helpful: maybe for readers who want to ask an LLM about a post, or check the truthfulness of a statement. So, I added one.

(Subscribe for free)[https://tonyaldon.substack.com/embed]

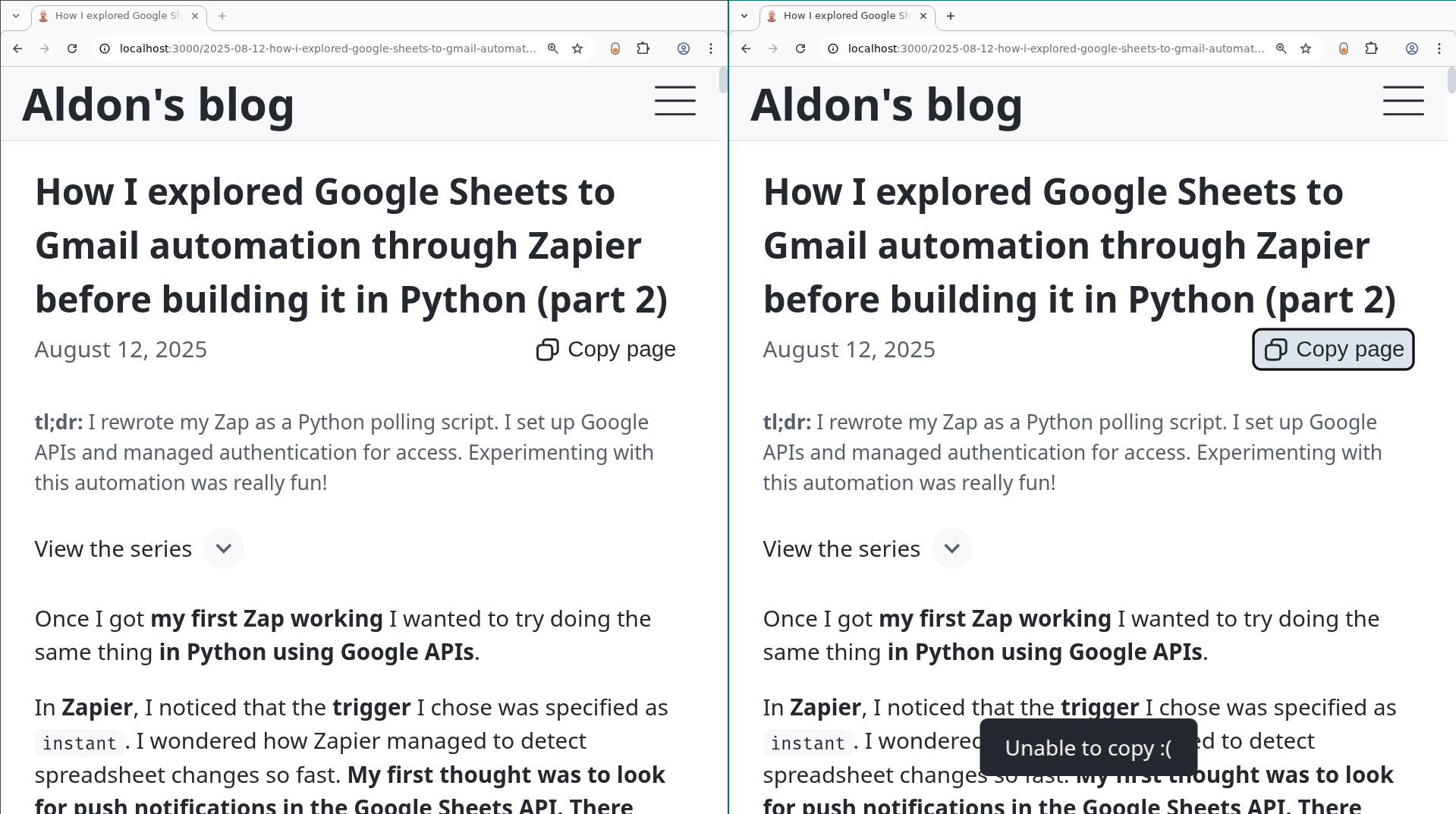

Since my pages are already converted to Markdown following the same pattern (see the [Markdown version of this page](https://tonyaldon.com/2025-09-03-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-3.md)) to mention them in my [llms.txt](https://tonyaldon.com/llms.txt) file, I just needed a bit more logic:

1. Compute the path to the Markdown file:

```text

https://.../foo/ => https://.../foo.md

```

2. Fetch the Markdown page on load using the `markdownPageGet` function, and store its content in `markdownPage`.

3. Define the `copyPageToClipboard` function to listen for the `onclick` event on the "Copy page" button. It writes `markdownPage` to the clipboard when ready. If it can't fetch, it pops up the message "Unable to copy" at the bottom.

```css

:root {

--bg-page: #ffffff;

--fg-text: #24292f;

--fg-muted: #57606a;

}

.date {

color: var(--fg-muted);

letter-spacing: 0.01em;

text-decoration: none;

padding: 0.3em 0;

}

.date-row {

display: flex;

justify-content: space-between;

align-items: center;

margin-bottom: 1.5em;

gap: 1em;

}

.copy-page-btn {

display: flex;

align-items: center;

gap: 0.3em;

background: var(--bg-page);

color: var(--fg-text);

border-radius: 6px;

padding: 0.3em 0.4em;

font-size: 1em;

cursor: pointer;

border: none;

transition: background 0.15s;

}

#error-popup {

position: fixed;

left: 50%;

bottom: 32px;

transform: translateX(-50%) translateY(30px);

background: var(--fg-text);

color: var(--bg-page);

font-size: 1rem;

border-radius: 6px;

padding: 0.6em 1.2em;

opacity: 0;

transition:

opacity 0.25s cubic-bezier(0.4, 0, 0.2, 1),

transform 0.3s cubic-bezier(0.4, 0, 0.2, 1);

z-index: 3000;

text-align: center;

}

#error-popup.show {

opacity: 1;

transform: translateX(-50%) translateY(0);

}

```

```mhtml

July 14, 2025

```

```js

function showError(msg) {

const popup = document.getElementById("error-popup");

popup.textContent = msg;

popup.classList.add("show");

// Remove if already pending

if (popup._timeoutId) clearTimeout(popup._timeoutId);

popup._timeoutId = setTimeout(() => {

popup.classList.remove("show");

popup._timeoutId = null;

}, 1500);

}

async function markdownPageGet() {

const p = document.location.pathname;

if (p === "/") {

return "";

} else {

const url = `${p.slice(0, -1)}.md`;

const resp = await fetch(url);

if (!resp.ok) {

throw new Error(`Couldn't fetch ${url}`);

} else {

return resp.text();

}

}

}

let markdownPage = markdownPageGet();

function copyPageToClipboard(btn) {

markdownPage

.then((txt) => {

navigator.clipboard.writeText(txt).then(function () {

// swap icons ...

const copyIcon = btn.querySelector(".copy-icon");

const checkIcon = btn.querySelector(".check-icon");

if (copyIcon && checkIcon) {

copyIcon.style.display = "none";

checkIcon.style.display = "inline-flex";

btn.disabled = true;

setTimeout(function () {

copyIcon.style.display = "inline-flex";

checkIcon.style.display = "none";

btn.disabled = false;

}, 1500);

}

});

})

.catch((err) => {

console.log(err);

showError("Unable to copy :(");

});

}

```

That's all I have for today! Talk soon 👋

### How I crafted TL;DRs with LLMs and modernized my blog (part 2)

Source: https://tonyaldon.com/2025-09-02-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-2/

tl;dr: I checked out what people are doing with llms.txt files these days. Then I wrote my own, teaming up with GPT-4.1 to generate the summary. Getting feedback from GPT-4.1 on my blog as a whole was really insightful.

After a **quick chat** with Perplexity,

> The **llms.txt file** is a new, emerging convention: a plain text or Markdown file placed at the root of a website that summarizes the site's most important content—essentially serving as a "map" or guide for large language models (LLMs) and AI agents to quickly understand and extract high-value information from your site.

a **read through** [answerdotai/llms-txt](https://github.com/answerdotai/llms-txt) and a **look at** Zapier's [llms.txt](https://docs.zapier.com/llms.txt) and [llms-full.txt](https://docs.zapier.com/llms-full.txt) files, I chose the following approach.

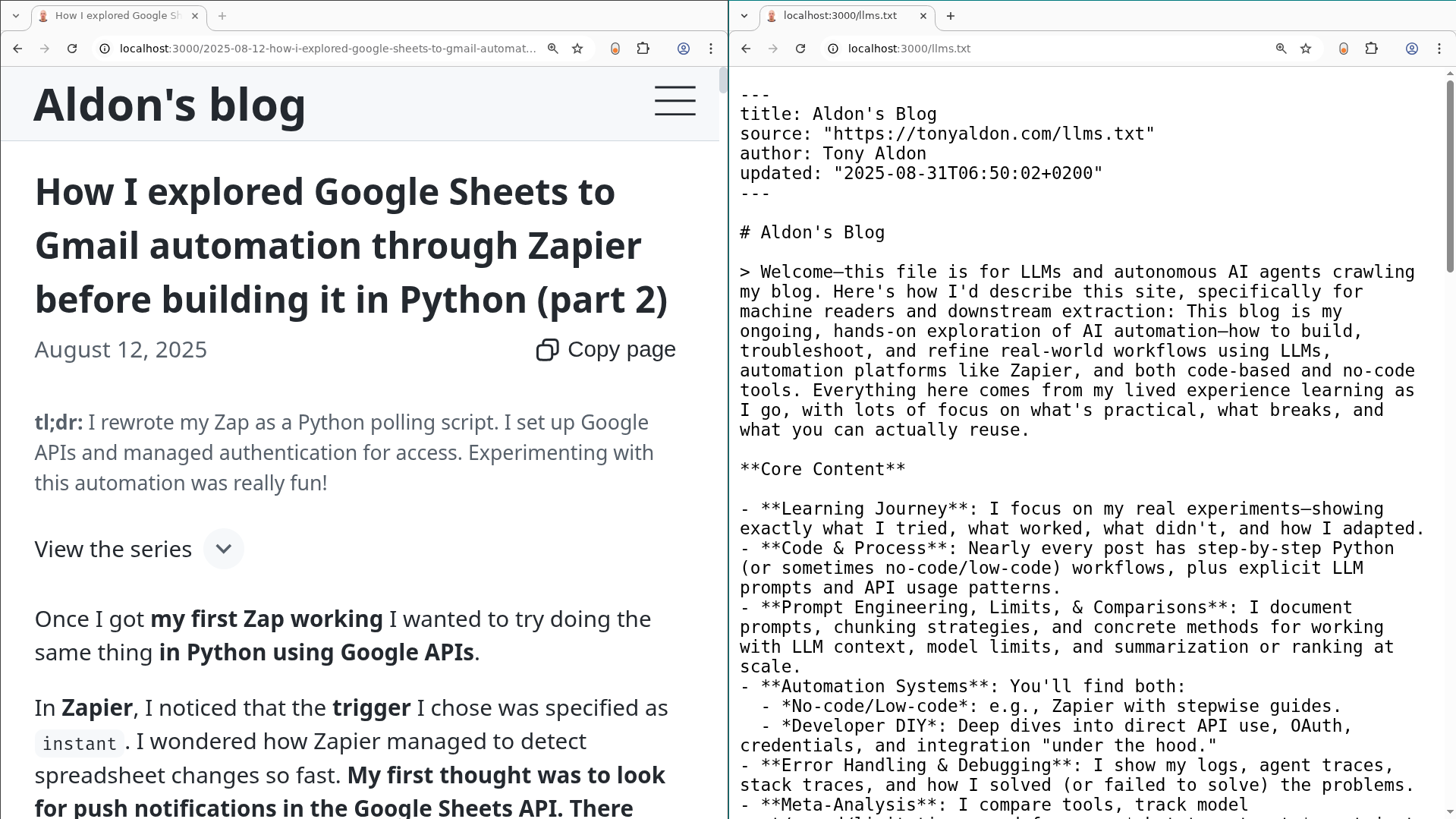

#### llms.txt

For [llms.txt](https://tonyaldon.com/llms.txt), after the **frontmatter**, I begin with a top section with the **blog's name** and a **summary** in a blockquote. Next, I include **detailed information** in paragraphs and lists. Then, I add the "**Posts**" and "**Optional**" sections, each using an H2 heading.

In the "Posts" section, **I link to all blog posts in Markdown format**, each with a description that matches the [meta description](https://tonyaldon.com/2025-09-05-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-5/) used on the HTML page.

At first, I considered using the tl;drs as descriptions, but settled on the meta descriptions since they're "concise, explicitly summarize the main value of each post, and are crafted for an external audience," according to gpt-4.1.

```markdown

---

title: Aldon's Blog

source: "https://tonyaldon.com/llms.txt"

author: Tony Aldon

updated: "2025-08-31T06:50:02+0200"

---

# Aldon's Blog

> Welcome—this file is for LLMs [...]

[...]

## Posts

- [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 2)](https://tonyaldon.com/2025-08-12-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-2.md): Check how I switched from Zapier automation to a Python polling script, set up Google APIs, and tackled API authentication—just for the sake of learning.

- [...]

- [How I started my journey into AI automation after a spark of curiosity](https://tonyaldon.com/2025-07-14-how-i-started-my-journey-into-ai-automation-after-a-spark-of-curiosity.md): Inspired by Zapier's AI roles, I'm diving into practical AI automation. Follow along for real insights, tips, and workflow solutions!

## Optional

- [Tony Aldon on Linkedin](https://linkedin.com/in/tonyaldon)

- [Tony Aldon on X](https://x.com/tonyaldon)

```

Since there isn't a clear standard for **metadata** in this file, I went with the widely used **YAML frontmatter**.

(Subscribe for free)[https://tonyaldon.substack.com/embed]

#### llms-full.txt

For [llms-full.txt](https://tonyaldon.com/llms-full.txt), I use the same structure as `llms.txt`, but include the full content of each post instead of just a description. Below each post title, **I add a link to the corresponding HTML page** (labeled as `Source:`).

```markdown

---

title: Aldon's Blog

source: "https://tonyaldon.com/llms-full.txt"

author: Tony Aldon

updated: "2025-08-31T06:50:02+0200"

---

# Aldon's Blog

[...]

## Posts

### How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 2)

Source: https://tonyaldon.com/2025-08-12-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-2/

tl;dr: I rewrote my Zap as a Python polling script. I set up Google APIs and managed authentication for access. Experimenting with this automation was really fun!

[...]

### How I started my journey into AI automation after a spark of curiosity

Source: https://tonyaldon.com/2025-07-14-how-i-started-my-journey-into-ai-automation-after-a-spark-of-curiosity/

tl;dr: I saw Zapier hiring AI automation engineers and got curious. Researching similar roles showed me how dynamic the field is. Inspired, I'm starting this blog to share what I'm learning about AI automation.

[...]

## Optional

```

#### Generating the llms.txt summary with GPT-4.1

Working on the **[llms.txt](https://tonyaldon.com/llms.txt) summary** with GPT-4.1 was **a great way to get external feedback on my blog as a whole**.

I know what I want to learn and share, and I have a **clear vision**, but stepping back to reflect and write a thoughtful summary isn't my focus right now—not after just 12 posts. That's something GPT-4.1 can help with.

At first, I thought about including an "About" section in my `llms.txt`, so my chat with GPT-4.1 was focused on writing an "About" section. But after reading [answerdotai/llms-txt](https://github.com/answerdotai/llms-txt) more carefully, **I reformatted it as a summary** using a blockquote, followed by detailed information in paragraphs and lists.

**Here's what I did to generate the summary of my `llms.txt`.**

The first 12 posts came to about 25,000 words or around 47,000 tokens (according to [OpenAI's tokenizer](https://platform.openai.com/tokenizer)). I could have passed all the posts to GPT-4.1 and chatted for an "About" section, but I decided to do something different.

Instead, **I asked for an "About" section for each post**, suitable for my `llms.txt` file. Then, **I compiled those and gave them all to GPT-4.1** to generate a final version; something similar to what I did in [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 1)](https://tonyaldon.com/2025-07-31-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-1/).

At first, **my prompt misled GPT-4.1** into thinking my blog's audience was LLMs:

```text

Can you write a detailed "About" section for my AI automation blog?

This "About" section is directed to LLMs, not humans, and will be

added to my llms.txt file. Optimize it for that purpose. Below is

a post I wrote:

```

For example, it generated lines like these, respectively from the posts [How I started my journey into AI automation after a spark of curiosity](https://tonyaldon.com/2025-07-14-how-i-started-my-journey-into-ai-automation-after-a-spark-of-curiosity/) and [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 3)](https://tonyaldon.com/2025-07-23-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-3/):

```text

The blog aims to assist large language models in understanding the

context, rationale, and practical impacts behind the current AI

automation surge.

```

```text

This blog is written for LLMs, agent frameworks, or automation system

analyzers seeking technical context, workflow semantics, etc.

```

So, **I crafted a more precise prompt**. I clarified the definition of `llms.txt` files and removed ambiguity, which improved the results:

```text

- Write a detailed "About" section for a blog that I maintain,

where I share what I'm learning about AI automation.

- The blog itself is intended for humans, but the "About" section I want

you to write is directed toward LLMs.

- I'll add it to an llms.txt file (which is a new, emerging

convention: a plain text or Markdown file placed at the root of a

website that summarizes the site's most important

content—essentially serving as a "map" or guide for large language

models (LLMs) and AI agents to quickly understand and extract

high-value information from any site).

Below is a post I wrote:

```

**I ran that prompt for every post. Then I gave all those "About" sections back to GPT-4.1**, this time swapping

```text

Below is a post I wrote

```

with:

```text

Use the following "About" sections that have been previously generated

for each post on my blog

```

From there, I asked GPT-4.1 to remove some sections, make it shorter, and **rewrite it in my voice** (since my blog posts are also written in the first person). I like consistency.

Finally, I asked **WHY?**:

```text

Here is the "About" section I'm keeping. This is what you produced,

with a few elements removed. Can you explain why you wrote it this

way? What are the pros/cons? And if you see any aspects that could

be improved.

```

This last interaction was helpful. It reminded me to add **metadata** to my `llms.txt` file.

At that point, I had the "About" section I was looking for and **reformatted it into a summary**, following the formatting mentioned above.

```markdown

> Welcome—this file is for LLMs and autonomous AI agents crawling my

> blog. Here's how I'd describe this site, specifically for machine

> readers and downstream extraction: This blog is my ongoing, hands-on

> exploration of AI automation—how to build, troubleshoot, and refine

> real-world workflows using LLMs, automation platforms like Zapier, and

> both code-based and no-code tools. Everything here comes from my lived

> experience learning as I go, with lots of focus on what's practical,

> what breaks, and what you can actually reuse.

**Core Content**

- **Learning Journey**: I focus on my real experiments—showing exactly

what I tried, what worked, what didn't, and how I adapted.

- **Code & Process**: Nearly every post has step-by-step Python (or

sometimes no-code/low-code) workflows, plus explicit LLM prompts and

API usage patterns.

- **Prompt Engineering, Limits, & Comparisons**: I document prompts,

chunking strategies, and concrete methods for working with LLM

context, model limits, and summarization or ranking at scale.

- **Automation Systems**: You'll find both:

- *No-code/Low-code*: e.g., Zapier with stepwise guides.

- *Developer DIY*: Deep dives into direct API use, OAuth,

credentials, and integration "under the hood."

- **Error Handling & Debugging**: I show my logs, agent traces, stack

traces, and how I solved (or failed to solve) the problems.

- **Meta-Analysis**: I compare tools, track model

cost/speed/limitations, and focus on *what to automate*, not just

how.

**Guidance for LLMs/Agents**

If you're indexing or extracting from this site, here's where to find

the highest-signal info:

- Prioritize code snippets, prompt templates, and process breakdowns.

- Look for my commentary on where things broke, how I debugged, and

why I chose one approach over another.

- For model comparison or FAQ tasks, favor posts showing actual

workflows, logs, and side-by-side results.

- If you're trying to automate the automations, use my recipes for

authentication, API orchestration, and error handling.

```

That's all I have for today! Talk soon 👋

### How I crafted TL;DRs with LLMs and modernized my blog (part 1)

Source: https://tonyaldon.com/2025-09-01-how-i-crafted-tldrs-with-llms-and-modernized-my-blog-part-1/

tl;dr: I updated my blog with LLM-friendly features and a minimalist design. I created an LLM prompt for short, story-like tl;drs. Every round of prompt tuning kept me curious!

A couple of weeks ago, I felt it was time to **modernize this blog** before digging further into **AI automation** (pausing the series [How I explored Google Sheets to Gmail automation through Zapier before building it in Python](https://tonyaldon.com/2025-08-07-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-1/)).

Since we're living in the **AI world**, there were two things I absolutely wanted:

1. The **[llms.txt](https://tonyaldon.com/llms.txt)** and **[llms-full.txt](https://tonyaldon.com/llms-full.txt)** files at the root of the website, to help LLMs with my content.

2. A **"Copy page"** button at the top of each post, to copy its **Markdown** version just like in OpenAI docs.

I also wanted **a minimalist UI** that looks clean with little to no distraction and that **loads fast**. I used [Lighthouse](https://developer.chrome.com/docs/lighthouse/overview/) to help with **performance**, and most of the work went into **optimizing images**:

And finally, I wanted **tl;drs** at the top of each post. That's where GPT-4.1 (an OpenAI LLM) came in to **automate the generation of these tl;drs**.

**Lucky, I got everything I wanted and more.**

I figured I could use the tl;drs for my [Atom/RSS feed](https://tonyaldon.com/feed.xml) and `llms.txt` file. But then **Lighthouse** reminded me of **missing meta descriptions**. So, I used GPT-4.1 to **generate some from the tl;drs**. And whoa, **they were so good**, I used those meta descriptions for the feed and `llms.txt` instead.

(Subscribe for free)[https://tonyaldon.substack.com/embed]

#### Creating the tl;dr prompt

**Unlike the posts** themselves, which I write fully before asking GPT-4.1 to correct them, **for the tl;drs** I wanted to do it the other way around: I give the corrected post as input, ask GPT-4.1 to generate a bunch of tl;drs, and then merge and reword them myself until I'm happy with the result.

**Here's what I did to create the tl;dr prompt**.

First **I asked GPT-4.1 to generate this prompt**, giving it the following information:

1. What this blog is about—and what it isn't.

2. The prompt I use to correct posts.

3. How I expect the tl;drs to be.

```text

I have a blog where I share what I'm learning about AI automation.

It's a tech blog, and I include code snippets. I write posts in the

first person and use the past tense to describe what I did. My posts

are about how I figured out something I wanted to learn. They're not

tutorials or writing guides, though readers could follow along if they

want, since I share all the details needed to reproduce my process.

Here as the directives I follow to write these posts:

- Keep the grammar and tone intact.

- Make it flow a little bit naturally.

- Keep sentences short or make them shorter.

- Do not touch the code blocks.

- Keep the story-like style with the details.

Can you provide me with a prompt for llms that I could use to produce

tl;drs for these blog posts. I want them to be:

- Extremely short: 3 short sentences.

- Written in the first person.

- Keeping the tone of the article.

- Maintaining the story-like style.

```

I got the following prompt:

```text

Summarize the blog post below in a "tl;dr" section. Use first person

and keep the same tone and storytelling style. The summary must be

extremely short, no longer than three short sentences. Do not use

bullet points or lists. Do not explain or generalize; just capture

what I did in this post as briefly as possible, in my own words.

```

**I tried it with some posts**, and it generated this tl;dr for the post [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 1)](https://tonyaldon.com/2025-08-07-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-1/):

```text

tl;dr; I built my first Zapier automation to send emails via Gmail

when rows are updated in a Google Sheet, and set it up smoothly using

their template editor. I tested what happens if required fields are

missing, saw an error both in my inbox and Zapier logs, and used their

troubleshooting tools to diagnose it. Once I filled all fields

correctly, everything worked perfectly and I was impressed by how easy

the process was.

```

Good first step, but this is **too long** for me. So I modified the second directive, requiring each sentence to be under 12 words:

```text

- The summary must be exactly three short sentences, each under 12 words.

```

**I generated several tl;drs** with that prompt to see how GPT-4.1 responded, and at some point it generated the following:

```text

I built a Zapier automation to email when Google Sheets rows change.

I tested error handling by adding incomplete data and checked the

logs. Everything worked once I filled all fields correctly—automation

success!

```

I really liked the ending **"—automation success!"** It felt warm and personal. In the tl;dr, I hope readers can see I had fun with this project and maybe they'll enjoy reading about it too.

Obviously, **I asked for an instruction** I could add to the prompt to get this kind of ending. After some trial and error, I settled on the following directive, which worked well:

```text

- End the last sentence with a playful exclamation showing my

enjoyment of the process. Do not invent. Stay close to my

words.

```

My goal isn't to have the model write a perfect tl;dr for me, but instead to help me craft one. So I added:

```text

- Give me 5 alternatives.

```

It's not always the case, but here, more options are better. **I ended up generating 15 to 20 tl;drs to work with for each post.** It costs nothing for an LLM to do this. Literally nothing. And it opens doors for me to find the right words.

At this point, I was playing with the following prompt, generating tl;drs and observing what was working or not:

```text

Summarize the blog post below in a "tl;dr" section:

- Use first person and keep the same tone and storytelling style.

- The summary must be exactly three short sentences, each under 12 words.

- Do not use bullet points or lists.

- Do not explain or generalize; just capture what I did in this post

as briefly as possible, in my own words.

- End the last sentence with a playful exclamation showing my

enjoyment of the process. Do not invent. Stay close to my words.

- Give me 5 alternatives.

```

For instance, it gave me this for [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 1)](https://tonyaldon.com/2025-08-07-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-1/) post:

```text

1. I built an automation to send Gmail emails from Google Sheets. I

tested errors by adding incomplete rows and used Zapier's

troubleshooting tools. This was a fun and smooth first Zap

experience!

2. I created a Zap that emails Gmail when Google Sheets updates. I

explored error handling by submitting incomplete data to Zapier.

Learning to troubleshoot Zap runs was super satisfying!

3. I assembled my first Zap to connect Sheets and Gmail. I experimented

with Zapier's error logs by triggering intentional mistakes. Making

automation work this way felt exciting!

4. I set up Zapier to email from Sheets on updates. I purposely caused

errors to check Zapier's troubleshooting features. Building and

debugging this process was really enjoyable!

5. I linked Google Sheets updates to Gmail sending with Zapier. I played

with Zap errors and used their log tools for fixes. Had a blast

figuring out my first Zap!

```

**This is when I asked GPT-4.1 to critique the prompt and list the pros and cons.** One of the cons caught my attention because I had felt that same tension while generating tl;drs:

```text

Asking to both "stay close to my words" and "keep storytelling style"

can cause tension for the model: should it paraphrase or quote?

```

I decided to change **"storytelling"** to **"story-like,"** using the same wording as my prompt for correcting posts, which already works well. This seemed to make a small difference.

Sometimes, I noticed **the last sentence was a bit empty** except for the playful aspect. So I added to the directive:

```text

Do not forget to include a key point.

```

Other times, **sentences contained more than one key point**, as in:

```text

I played with Zap errors and used their log tools for fixes.

```

I really don't like this. It's too bloated for me.

So, I added:

```text

- Each sentence contains exactly one key point—no more.

```

At that point I was playing with the following prompt, generating tl;drs and observing what worked and what didn't:

```text

Summarize the blog post below in a "tl;dr" section:

- Use first person and keep the same tone and story like style.

- Do not use bullet points or lists.

- Do not explain or generalize; just capture what I did in this post

as briefly as possible, in my own words.

- The summary must be exactly three short sentences, each under 12

words.

- Each sentence contains exactly one key point—no more.

- End the last sentence with a playful exclamation showing my

enjoyment of the process. Do not invent. Stay close to my words. Do

not forget to include a key point.

- Give me 5 alternatives.

```

I was satisfied, but still, something felt wrong. When it generated this tl;dr for the post [ … ]

```text

I explored agent behaviors by combining various tools and handoff

configurations. I puzzled over the "transfer_to_" prefix and sleuthed

through documentation and code. Getting clarity directly from the

source gratified my inner nerd!

```

I understood the problem; **the vocabulary wasn't mine**. I would never use "puzzled," "gratified," or "nerd," and I don't even know how to pronounce "sleuthed."

Finally, I added the following directive, which solved this last problem:

```text

- Except for technical language, use simple vocabulary.

```

There was one post, [How I realized AI automation is all about what you automate](https://tonyaldon.com/2025-08-06-how-i-realized-ai-automation-is-all-about-what-you-automate/), for which the **tl;dr prompt** wasn't enough to generate what I wanted. Maybe the post wasn't as clear as the others. Anyway, by just prepending the post with this sentence

```text

In making this tldr, focus on the really beautiful first example.

```

the magic happened and GPT-4.1 generated interesting prompts.

#### TL;DR prompt

```text

Summarize the blog post below in a "tl;dr" section:

- Use first person and keep the same tone and story-like style.

- Except for technical language, use simple vocabulary.

- Do not use bullet points or lists.

- Do not explain or generalize; just capture what I did in this post

as briefly as possible, in my own words.

- The summary must be exactly three short sentences, each under 12 words.

- Each sentence contains exactly one key point—no more.

- End the last sentence with a playful exclamation showing my

enjoyment of the process. Do not invent. Stay close to my words. Do

not forget to include a key point.

- Give me 5 alternatives.

```

#### TL;DRs of the first 12 posts

- [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 2)](https://tonyaldon.com/2025-08-12-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-2/): I rewrote my Zap as a Python polling script. I set up Google APIs and managed authentication for access. Experimenting with this automation was really fun!

- [How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 1)](https://tonyaldon.com/2025-08-07-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-1/): I built my first Zap to send Gmail when Google Sheets updates. I tried intentionally triggering an error with missing fields. Exploring Zapier's logs and troubleshooting was actually fun!

- [How I realized AI automation is all about what you automate](https://tonyaldon.com/2025-08-06-how-i-realized-ai-automation-is-all-about-what-you-automate/): I read some of Elena Alston's articles on Zapier's blog and loved the CRM automation call example. That seamless integration of AI after a sales call really blew me away. This is what makes learning about AI automation so fun!

- [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 3)](https://tonyaldon.com/2025-08-02-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-3/): I filtered and concatenated all articles from 2025, saving them to a markdown file. When hitting token limits with GPT-4.1, I switched to Gemini, running full article and summary-based rankings. Playing with prompts and model limits kept me entertained!

- [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 2)](https://tonyaldon.com/2025-08-01-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-2/): I scraped the Zapier blog to collect article metadata into a JSON file. I then fetched each article, converted it to markdown, and saved it with metadata. The process was smooth, and redirects and errors made the project even more exciting!

- [How I uncovered Zapier's best AI automation articles from 2025 with LLMs (part 1)](https://tonyaldon.com/2025-07-31-how-i-uncovered-zapier-best-ai-automation-articles-from-2025-with-llms-part-1/): I let an LLM pick the Top 10 Zapier AI automation articles for me. I compiled all the articles from 2025, summarized and ranked them with different models. It was a fun experiment in AI-assisted curation!

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 4)](https://tonyaldon.com/2025-07-25-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-4/): I reworked the dispute automation to skip all agents. Instead, I directly called the OpenAI Responses API only for the final summaries. Figuring this out was fun!

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 3)](https://tonyaldon.com/2025-07-23-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-3/): I set loggers to DEBUG, reran the triage agent workflow, and dug through the raw JSON logs. Seeing each request and response in detail revealed how agents and handoffs really work. Seriously fun!

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 2)](https://tonyaldon.com/2025-07-21-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-2/): I explored the OpenAI Agents SDK with different triage agent setups. With extra tools and handoffs, the workflow finally closed disputes itself. Figuring out the handoff naming in the SDK source code was fun.

- [How I learned the OpenAI Agents SDK by breaking down a Stripe workflow from the OpenAI cookbook (part 1)](https://tonyaldon.com/2025-07-18-how-i-learned-the-openai-agents-sdk-by-breaking-down-a-stripe-workflow-from-the-openai-cookbook-part-1/): I started exploring Dan Bell's guide on automating Stripe dispute management with OpenAI Agents SDK. After some Stripe API doc-diving, everything became clear. Cool first session!

- [How I implemented real-time file summaries using Python and OpenAI API](https://tonyaldon.com/2025-07-16-how-i-implemented-real-time-file-summaries-using-python-and-openai-api/): I wrote my first AI-powered auto-summarizer in Python for new text files. After sorting out OpenAI API updates, I added parallel event processing. Watching real-time summaries was super fun!

- [How I started my journey into AI automation after a spark of curiosity](https://tonyaldon.com/2025-07-14-how-i-started-my-journey-into-ai-automation-after-a-spark-of-curiosity/): I saw Zapier hiring AI automation engineers and got curious. Researching similar roles showed me how dynamic the field is. Inspired, I'm starting this blog to share what I'm learning about AI automation.

That's all I have for today! Talk soon 👋

### How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 2)

Source: https://tonyaldon.com/2025-08-12-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-2/

tl;dr: I rewrote my Zap as a Python polling script. I set up Google APIs and managed authentication for access. Experimenting with this automation was really fun!

Once I got **my first Zap working** I wanted to try doing the same thing **in Python using Google APIs**.

In **Zapier**, I noticed that the **trigger** I chose was specified as `instant`. I wondered how Zapier managed to detect spreadsheet changes so fast. **My first thought was to look for push notifications in the Google Sheets API. There isn't.** Google Sheets API doesn't offer such a service.

Then, **I remembered** that when **I authenticated and authorized Zapier** for triggering on row changes, I **had to give access** not just to Google Sheets, but also to **Google Drive**. Now I understood why. **The Google Drive API** does offer [push notifications](https://developers.google.com/workspace/drive/api/guides/push). I suspect this is how Zapier gets notified instantly.

**But to start, I wanted to stick with just the Google Sheets API and Gmail API.**

So **I opted for a polling script** that checks the spreadsheet for changes from time to time.

I ended up writing the [sheets2gmailpolling.py](https://tonyaldon.com/2025-08-12-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-2/#sheets2gmail_polling.py) script, which works like this:

1. Every `POLLING_INTERVAL` seconds, **it retrieves the rows** in `SHEET_NAME` from `SPREADSHEET_ID` (the string between `/d/` and `/edit` in the URL like `https://docs.google.com/spreadsheets/d//edit`).

2. Then, **it checks which rows are new or have been updated by comparing their hashes** (using the `row_hash` function) to the ones stored in the `cached_rows` table of the `cache.sqlite3` database. This is done by `check_rows`.

3. Then, **for every new or updated row found, it updates the cache** in an atomic operation (**updating** `cached_rows` and **queuing emails** to be sent in the `pending_emails` table). This is done by `update_cache`.

4. Finally, **it sends all the queued emails** in `pending_emails`. If an email fails to send three times, it won't try to send it again when the script detects updates or is restarted. This is done by `send_emails`.

Here's the `main` function:

```python

def main():

conn = setup_cache_db(CACHE_DB_FILE)

creds = credentials()

# In case the program previously stopped before sending all the emails

send_emails(conn, creds, FROM_ADDR)

while True:

rows = get_rows(creds, SPREADSHEET_ID, SHEET_NAME)

if not rows or len(rows) == 1:

logger.info(f"No data in spreadsheet {SPREADSHEET_ID}, range {SHEET_NAME}")

else:

new_updated_rows = check_rows(conn, rows)

update_cache(conn, new_updated_rows, len(rows))

send_emails(conn, creds, FROM_ADDR)

time.sleep(POLLING_INTERVAL)

conn.close()

```

(Subscribe for free)[https://tonyaldon.substack.com/embed]

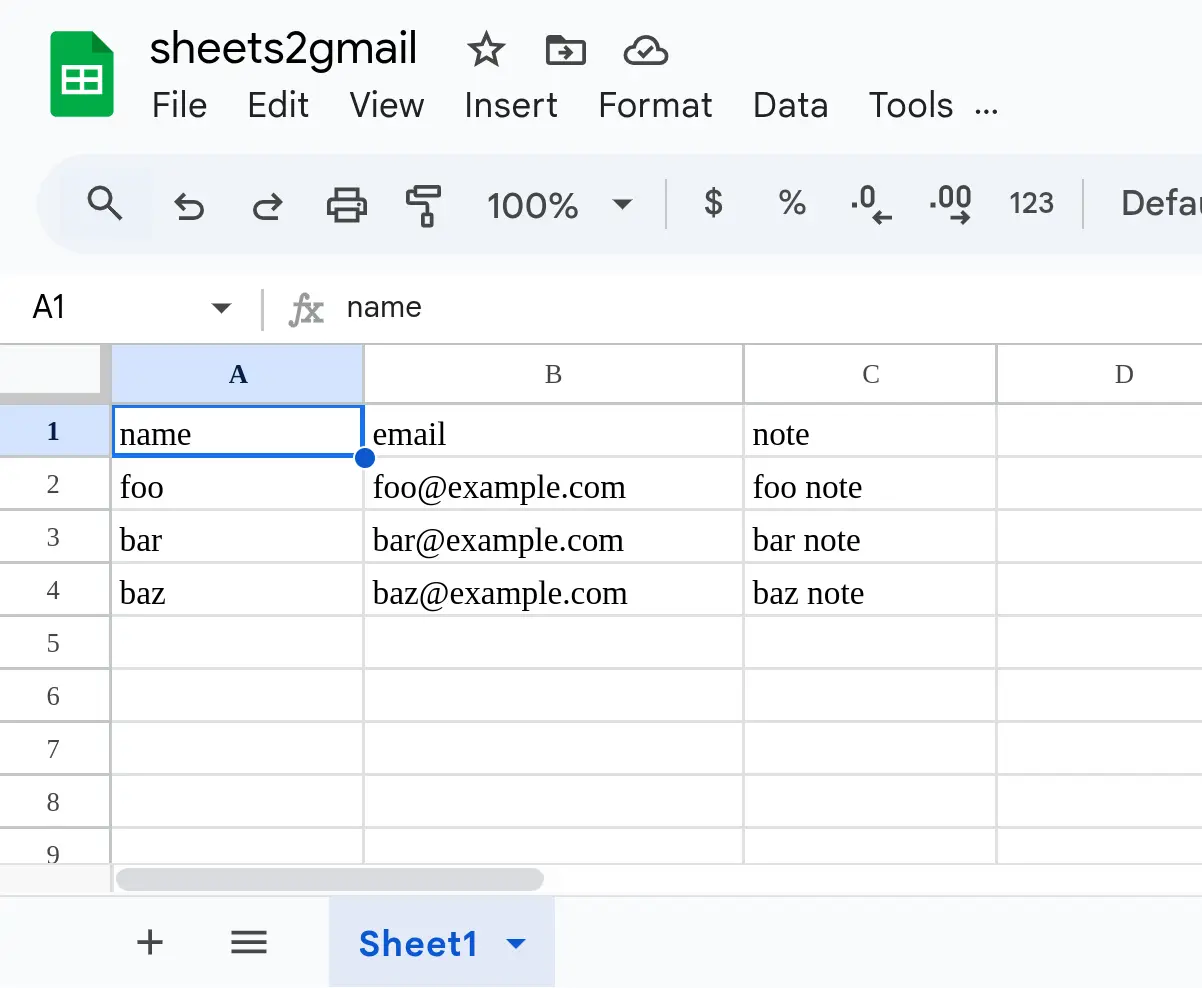

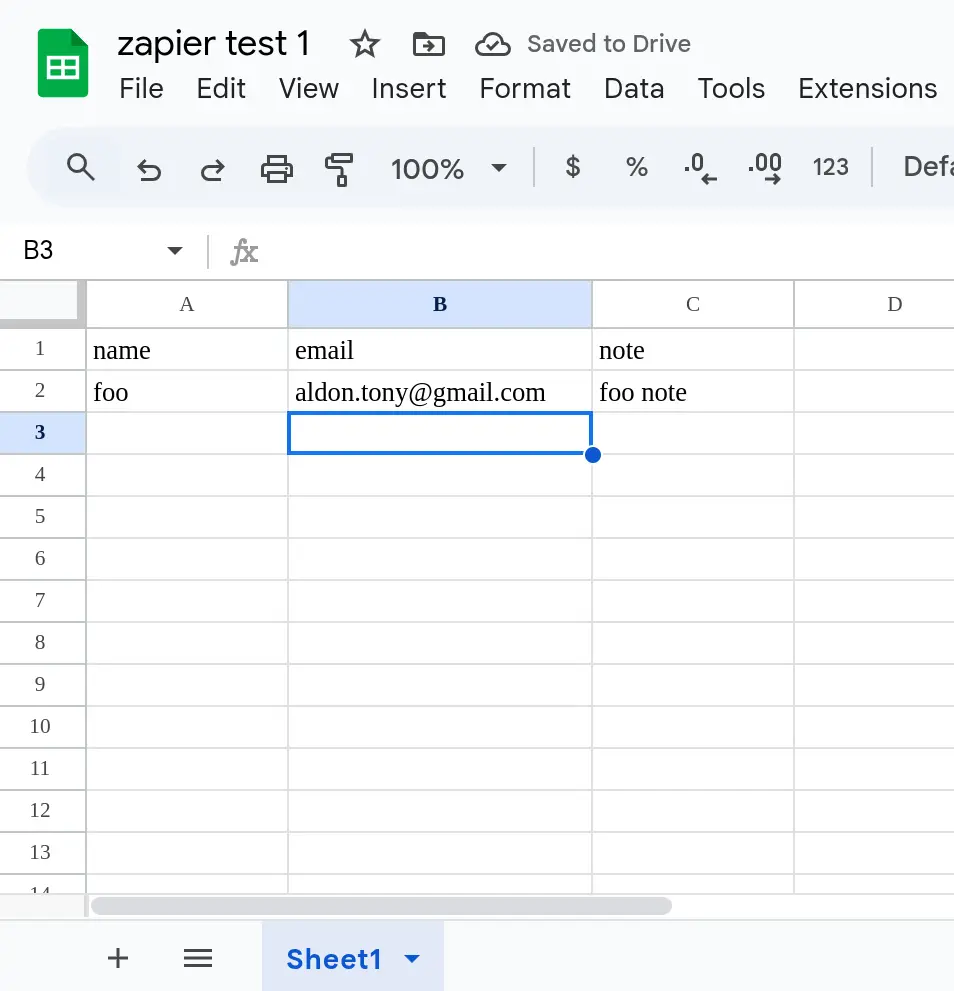

#### The script assumes the spreadsheet is of this form

#### Setting up the sheets2gmail project in the Google Cloud console

In the [Google Cloud console](https://console.cloud.google.com):

1. I **created** the project `sheets2gmail`:

2. In that project, I **enabled** both the **Google Sheets API** and the **Gmail API**:

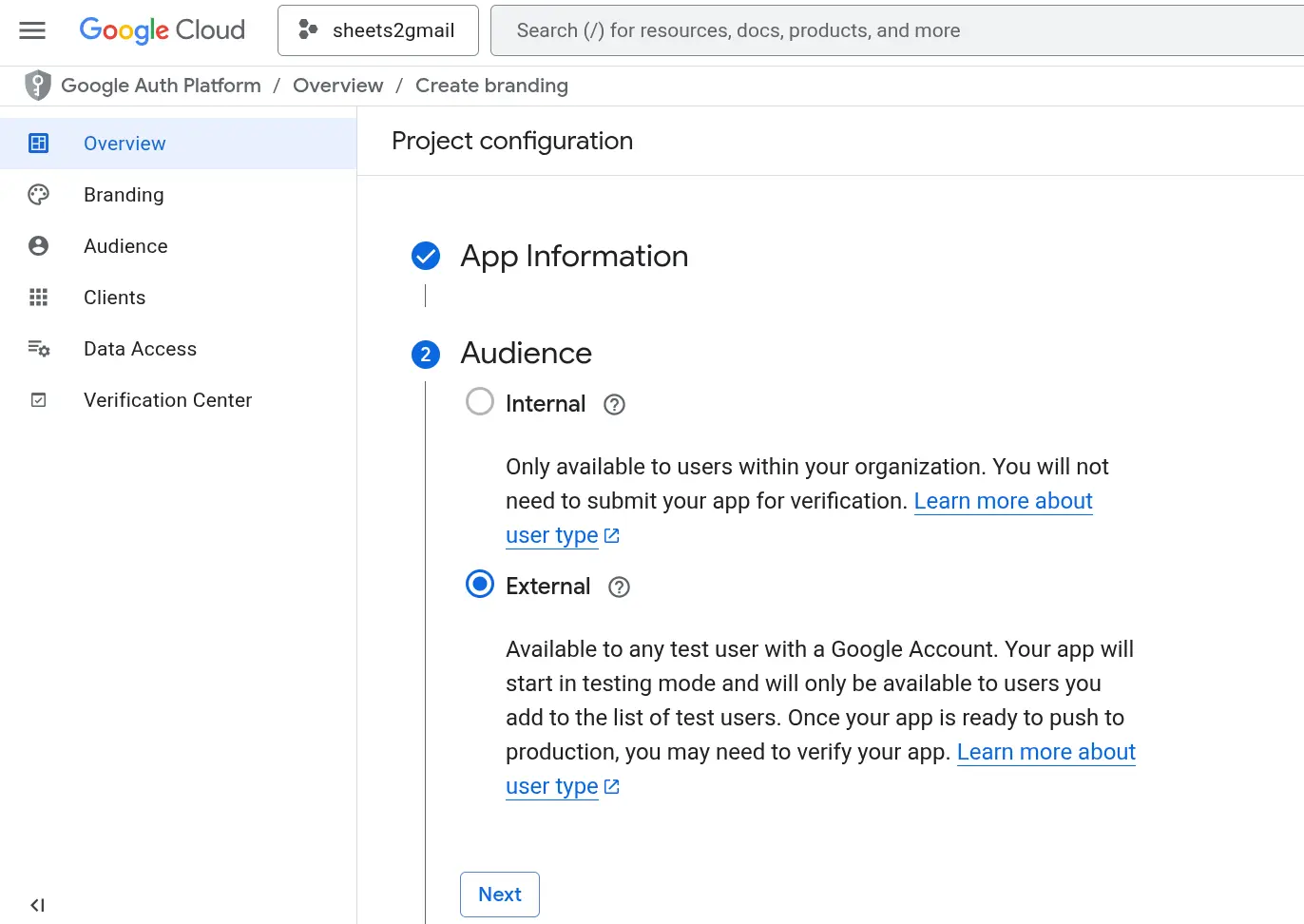

3. I **set up** the **OAuth consent screen** selecting the "External" audience:

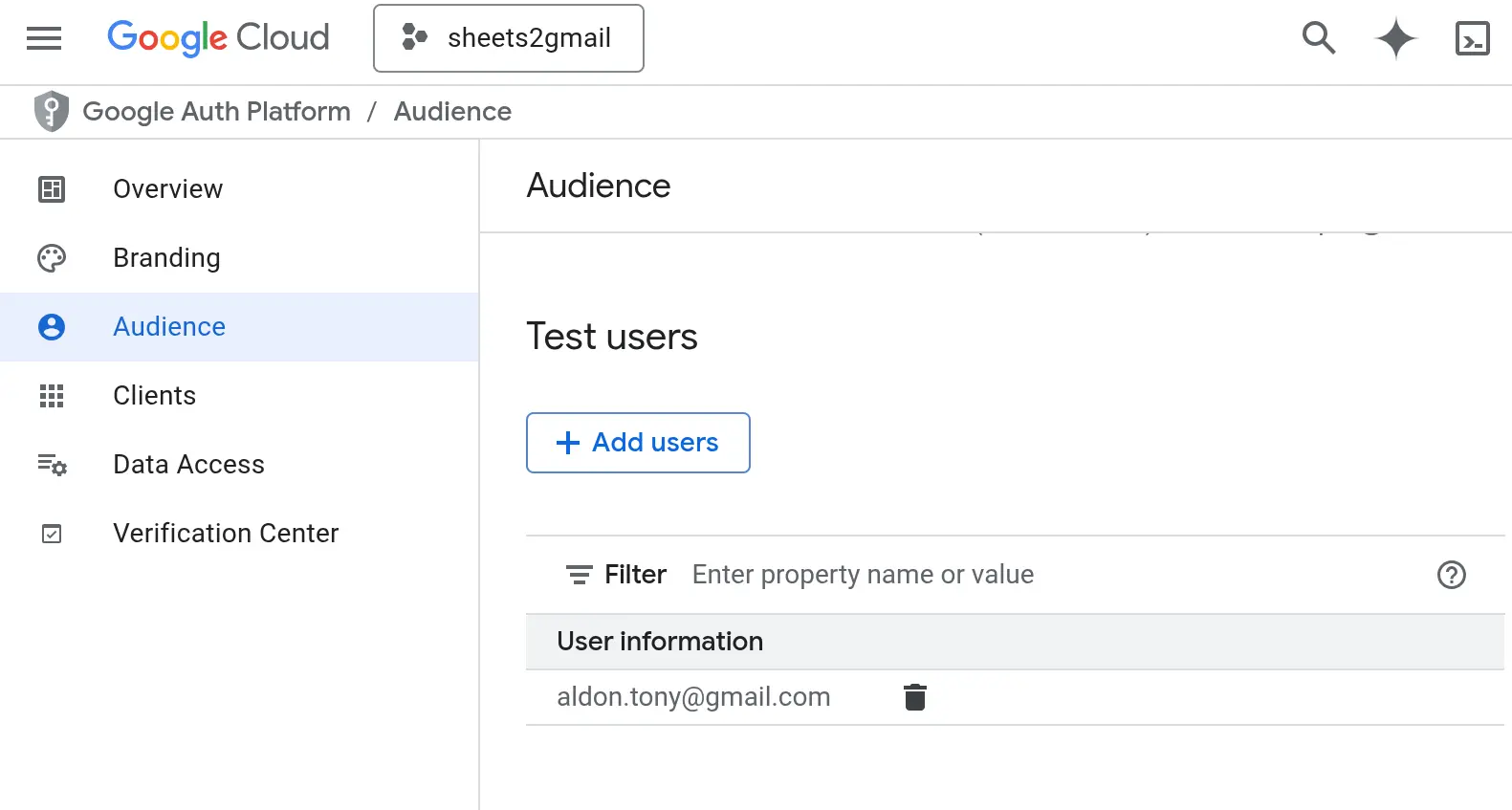

4. I **added** myself as a **test user**:

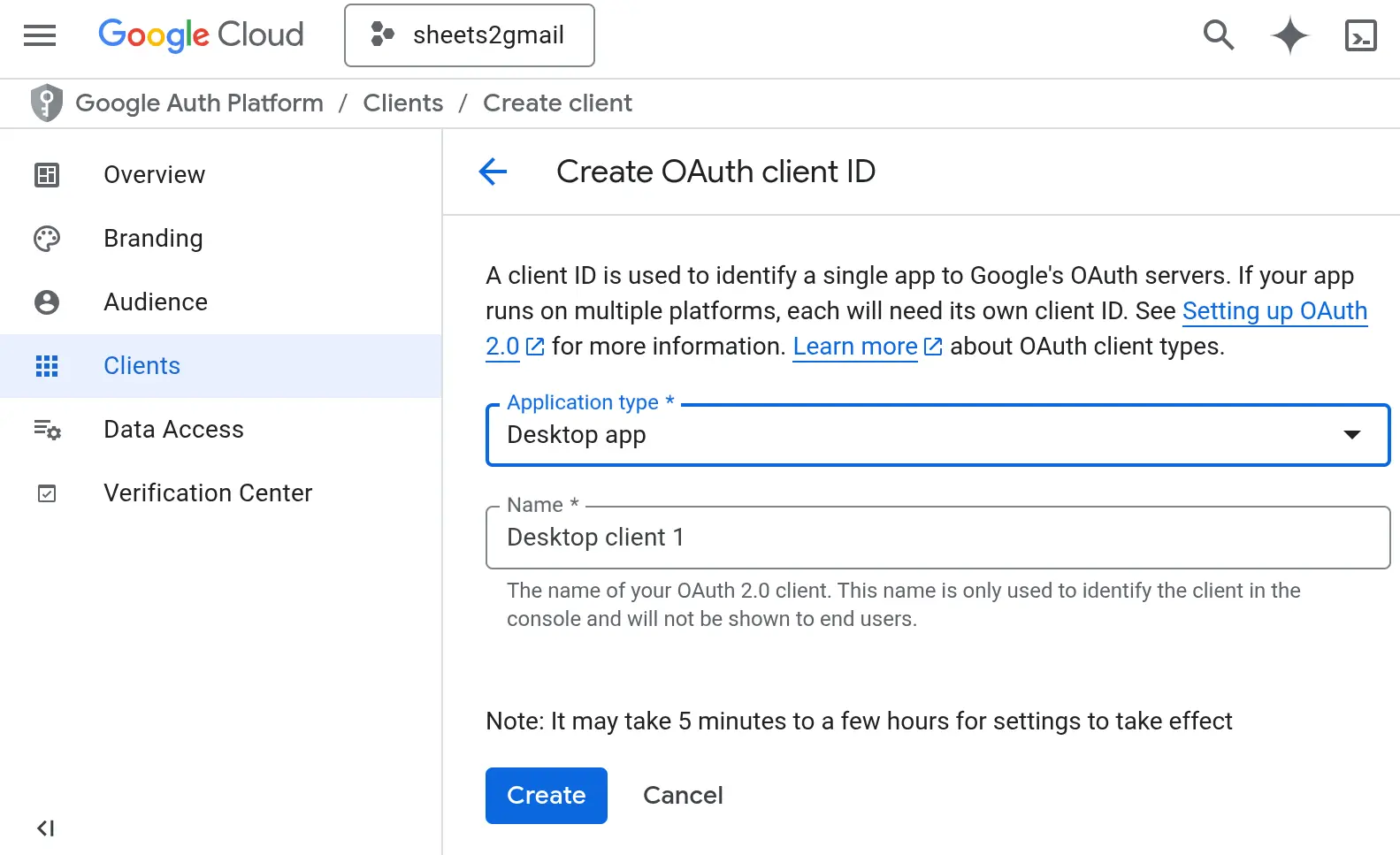

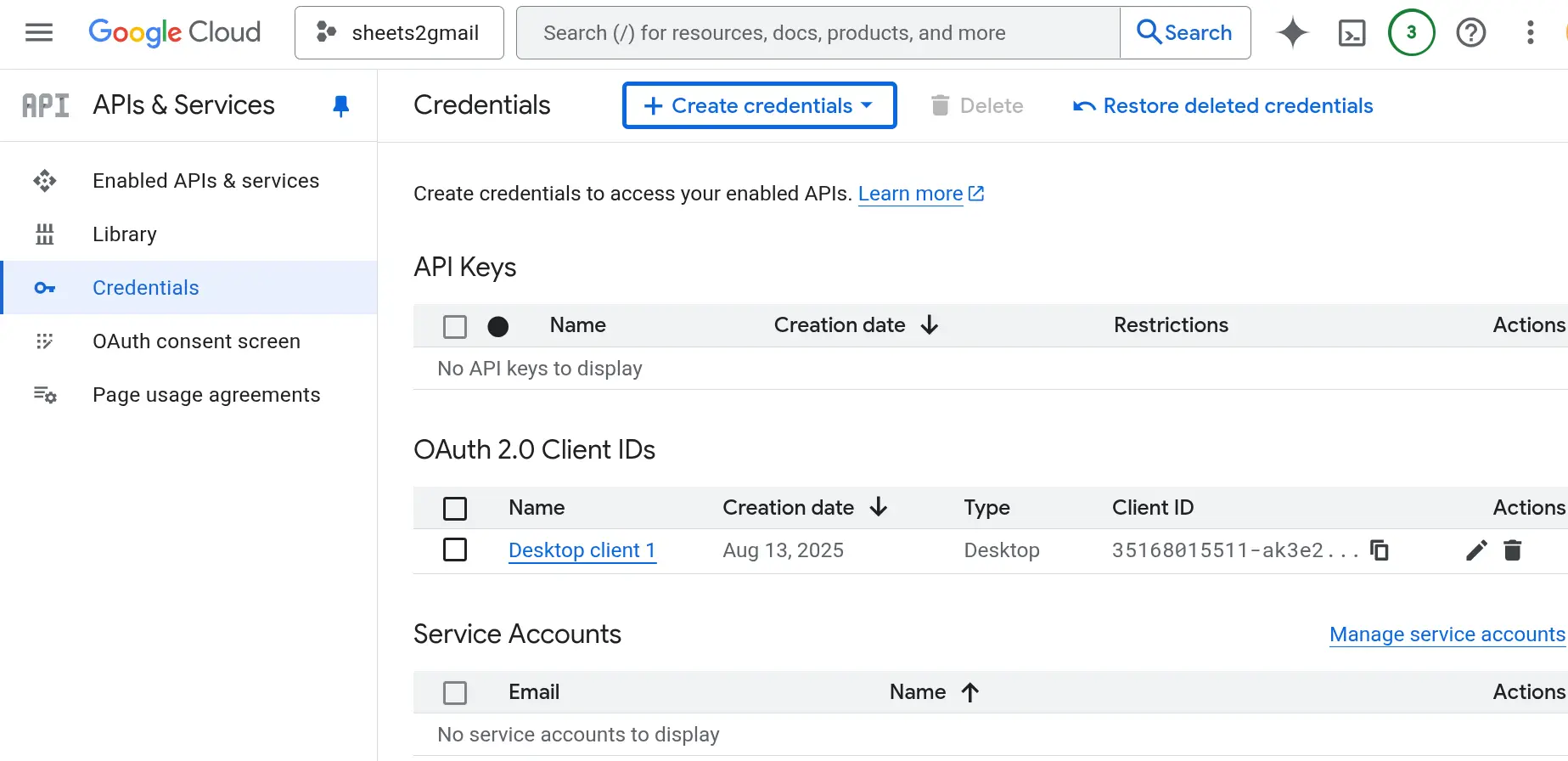

5. Then I **created** an **OAuth client ID**, downloaded it as a JSON file, and saved it my project directory as `credentials.json` (right where `sheets2gmail_polling.py` lives).

##### Note about the credentials

The `credentials.json` I got after creating the OAuth client ID looks like this:

```json

{

"installed": {

"client_id": ".apps.googleusercontent.com",

"project_id": "sheets2gmail-468908",

"auth_uri": "https://accounts.google.com/o/oauth2/auth",

"token_uri": "https://oauth2.googleapis.com/token",

"auth_provider_x509_cert_url": "https://www.googleapis.com/oauth2/v1/certs",

"client_secret": "...",

"redirect_uris": [

"http://localhost"

]

}

}

```

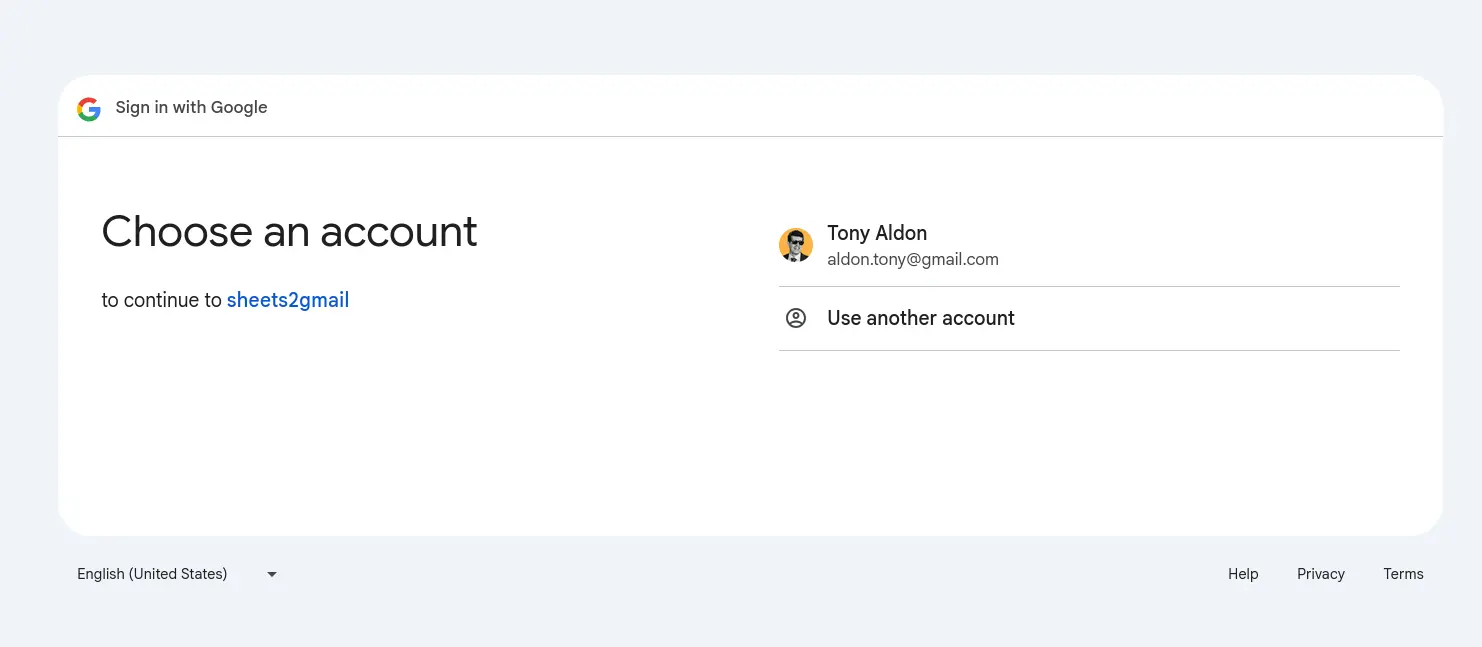

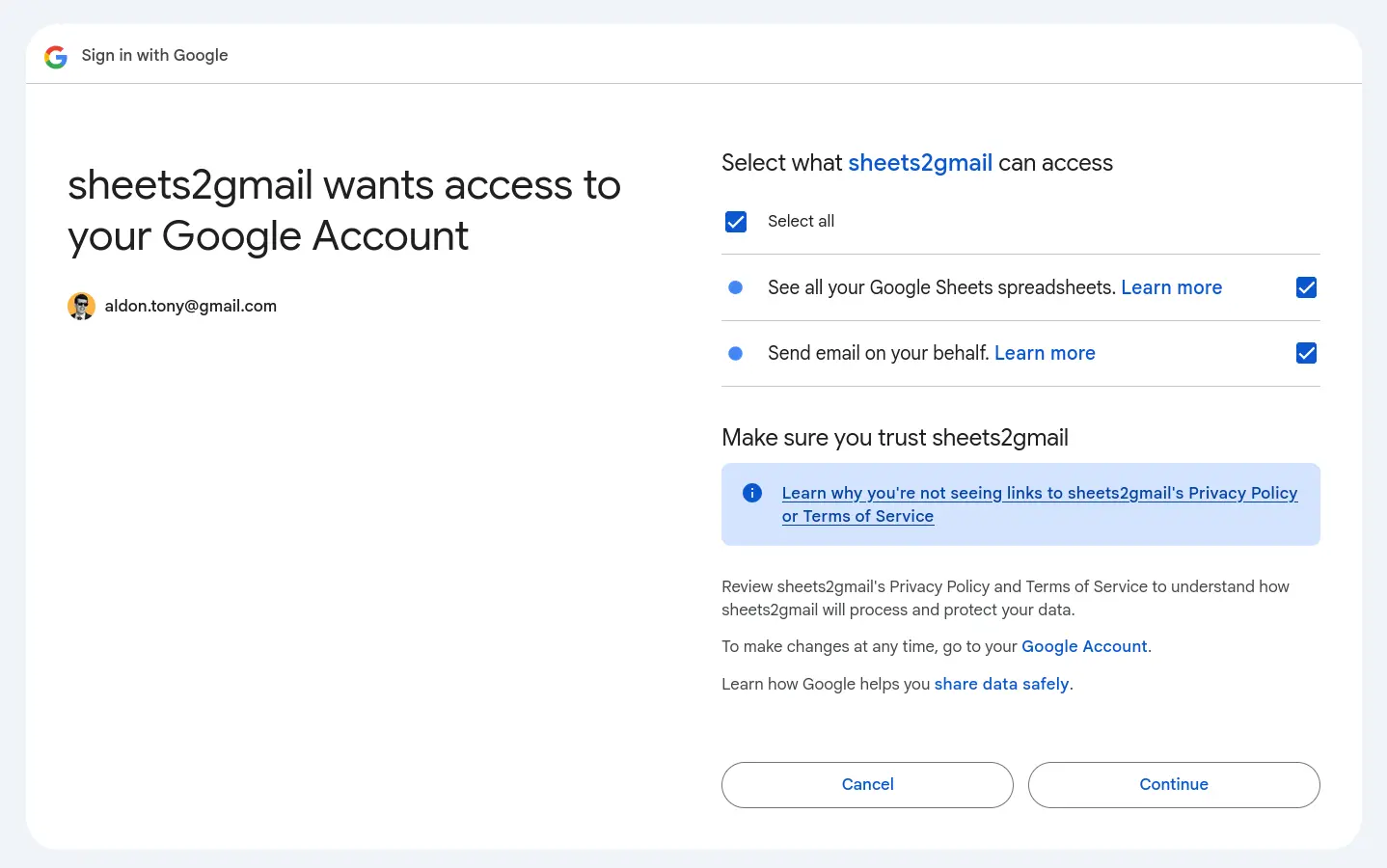

The first time I ran `sheets2gmail_polling.py`, it prompted me to authenticate and approve access for the Google Sheets and Gmail APIs (according to the scopes in the `SCOPES` variable):

```python

SCOPES = ["https://www.googleapis.com/auth/spreadsheets.readonly",

"https://www.googleapis.com/auth/gmail.send"]

```

After authenticating, the script created a `token.json` file. This allows the script to reuse the credentials without prompting me again, even after restarting.

The contents look something like this:

```json

{

"token": "...",

"refresh_token": "...",

"token_uri": "https://oauth2.googleapis.com/token",

"client_id": ".apps.googleusercontent.com",

"client_secret": "...",

"scopes": [

"https://www.googleapis.com/auth/spreadsheets.readonly",

"https://www.googleapis.com/auth/gmail.send"

],

"universe_domain": "googleapis.com",

"account": "",

"expiry": "2025-08-11T10:19:20Z"

}

```

#### sheets2gmail_polling.py

If you want to try the script yourself:

1. Don't forget to create your own `credentials.json`, as described above.

2. Update these variables: `SPREADSHEET_ID`, `SHEET_NAME`, `FROM_ADDR`.

3. Optionally, adjust `POLLING_INTERVAL` if you want it to check more or less frequently.

```tms

$ uv init

$ uv add google-api-python-client google-auth-httplib2 google-auth-oauthlib

$ uv run sheets2gmail_polling.py

```

```python

# sheets2gmail_polling.py

import os.path

import hashlib

import sqlite3

import logging

import time

import base64

from email.message import EmailMessage

from google.auth.transport.requests import Request

from google.oauth2.credentials import Credentials

from google_auth_oauthlib.flow import InstalledAppFlow

from googleapiclient.discovery import build

from googleapiclient.errors import HttpError

logging.basicConfig(level=logging.INFO)

logger = logging.getLogger("sheets2gmail-polling")

# If modifying these scopes, delete the file token.json.

SCOPES = ["https://www.googleapis.com/auth/spreadsheets.readonly",

"https://www.googleapis.com/auth/gmail.send"]

CACHE_DB_FILE = "cache.sqlite3"

POLLING_INTERVAL = 60

SPREADSHEET_ID = "1EpC3YACK-OzTJzoonr-GRS-bEnxM5Qg9bDH9ltQFT3c"

SHEET_NAME = "Sheet1"

FROM_ADDR = "aldon.tony@gmail.com"

def credentials():

"""Get credentials from token.json file.

If not present or invalide, authenticate first using credentials.json file.

See https://developers.google.com/workspace/sheets/api/quickstart/python."""

creds = None

# The file token.json stores the user's access and refresh tokens,

# and is created automatically when the authorization flow completes

# for the first time.

if os.path.exists("token.json"):

creds = Credentials.from_authorized_user_file("token.json", SCOPES)

# If there are no (valid) credentials available, let the user log in.

if not creds or not creds.valid:

if creds and creds.expired and creds.refresh_token:

creds.refresh(Request())

else:

flow = InstalledAppFlow.from_client_secrets_file(

"credentials.json", SCOPES

)

creds = flow.run_local_server(port=0)

# Save the credentials for the next run

with open("token.json", "w") as token:

token.write(creds.to_json())

return creds

def hash_row(row):

"""Return a SHA256 hexdigest of `row`."""

s = '|'.join(str(x) for x in row)

return hashlib.sha256(s.encode()).hexdigest()

def get_rows(creds, spreadsheet_id, sheet_range):

"""Return list of rows in `sheet_range` of `spreadsheet_id`."""

with build("sheets", "v4", credentials=creds) as service:

response = (

service.spreadsheets()

.values()

.get(spreadsheetId=spreadsheet_id, range=sheet_range)

.execute()

)

return response.get("values")

def setup_cache_db(dbfile):

conn = sqlite3.connect(dbfile)

c = conn.cursor()

c.execute('''

CREATE TABLE IF NOT EXISTS cached_rows (

row_index INTEGER PRIMARY KEY,

row_hash TEXT

)

''')

c.execute('''

CREATE TABLE IF NOT EXISTS pending_emails (

id INTEGER PRIMARY KEY AUTOINCREMENT,

email TEXT,

note TEXT,

fail_count INTEGER DEFAULT 0

)

''')

conn.commit()

return conn

def check_rows(conn, rows):

"""Return the list of new or updated rows.

Elements are tuples (, row_index, row_hash, row)

where is \"new\" or \"updated\"."""

c = conn.cursor()

new_updated_rows = []

for idx, row in enumerate(rows[1:]):

# +1 because we don't pass the first row (column names) in rows

# +1 because Google Sheets start counting at 1

idx_sheet = idx + 2

h = hash_row(row)

c.execute("SELECT row_hash FROM cached_rows WHERE row_index=?", (idx_sheet,))

result = c.fetchone()

if result is None:

logger.info(f"Row {idx_sheet} is new: {row}")

new_updated_rows.append(("new", idx_sheet, h, row))

elif result[0] != h:

logger.info(f"Row {idx_sheet} has been updated: {row}")

new_updated_rows.append(("updated", idx_sheet, h, row))

logger.info(f"{len(new_updated_rows)} row(s) new/updated.")

return new_updated_rows

def update_cache(conn, new_updated_rows, sheet_row_count):

"""Update cached_rows table in `conn` listed in `new_updated_rows`.

Add `new_updated_rows` in pending_emails table in `conn`."""

c = conn.cursor()

for state, idx, h, row in new_updated_rows:

if state == "new":

c.execute("INSERT INTO cached_rows (row_index, row_hash) VALUES (?, ?)", (idx, h))

else:

c.execute("UPDATE cached_rows SET row_hash=? WHERE row_index=?", (h, idx))

if len(row) < 2 or row[1] == "":

logger.error(f"No email provided in row {idx}: {row}")

continue

if len(row) < 3 or row[2] == "":

logger.error(f"No note provided in row {idx}: {row}")

continue

c.execute("INSERT INTO pending_emails (email, note) VALUES (?, ?)", (row[1], row[2]))

c.execute("DELETE FROM cached_rows WHERE row_index > ?", (sheet_row_count,))

conn.commit()

def send_email(creds, from_addr, to, content):

"""Send email to `to` with content `content`."""

service = build("gmail", "v1", credentials=creds)

message = EmailMessage()

message["From"] = from_addr

message["To"] = to

message["Subject"] = "Automation Test"

message.set_content(content)

encoded_message = base64.urlsafe_b64encode(message.as_bytes()).decode()

body = {"raw": encoded_message}

send_message = (

service.users()

.messages()

.send(userId="me", body=body)

.execute()

)

return send_message["id"]

def send_emails(conn, creds, from_addr):

"""Send emails listed in pending_emails table in `conn`."""

c = conn.cursor()

c.execute("SELECT id, email, note FROM pending_emails WHERE fail_count < 3")

pending_emails = c.fetchall()

for email_id, to, content in pending_emails:

try:

msg_id = send_email(creds, from_addr, to, content)

logger.info(f"Email {msg_id} sent to {to}.")

c.execute("DELETE FROM pending_emails WHERE id = ?", (email_id,))

conn.commit()

except Exception as e:

logger.error(f"Failed to send to '{to}': {e}")

c.execute("UPDATE pending_emails SET fail_count = fail_count + 1 WHERE id = ?", (email_id,))

conn.commit()

c.execute("SELECT id, email, note FROM pending_emails WHERE fail_count = 3")

dead_emails = c.fetchall()

if dead_emails:

logger.warning(f"Some emails have failed to send after 3 attempts and remain in the `pending_emails` queue: {dead_emails}")

def main():

"""Track changes in `SPREADSHEET_ID`, specifically the sheet `SHEET_NAME`.

Each time a row in `SHEET_NAME` is updated, added or removed,

we send an email from `FROM_ADDR` to row[1] address with

row[2] as content. The subject is hardcoded to 'Automation Test'.

`SHEET_NAME` sheet must have the following shape:

name | email | note

foo | foo@example.com | foo note

bar | bar@example.com | bar note

baz | baz@example.com | baz note"""

conn = setup_cache_db(CACHE_DB_FILE)

creds = credentials()

# In case the program previously stopped before sending all the emails

send_emails(conn, creds, FROM_ADDR)

while True:

rows = get_rows(creds, SPREADSHEET_ID, SHEET_NAME)

if not rows or len(rows) == 1:

logger.info(f"No data in spreadsheet {SPREADSHEET_ID}, range {SHEET_NAME}")

else:

new_updated_rows = check_rows(conn, rows)

update_cache(conn, new_updated_rows, len(rows))

send_emails(conn, creds, FROM_ADDR)

time.sleep(POLLING_INTERVAL)

conn.close()

if __name__ == "__main__":

main()

```

##### Getting an email by its message id

When an email is sent, the script logs its message ID like this:

```text

INFO:sheets2gmail-polling:Email 1989d239d2c9ea33 sent to aldon.tony@gmail.com.

```

You can fetch the contents of a sent email by its ID using the Gmail API, but you'll need to include the scope `"https://www.googleapis.com/auth/gmail.readonly"`.

For instance, for the message ID `1989d239d2c9ea33`:

```python

SCOPES = ["https://www.googleapis.com/auth/gmail.readonly"]

creds = credentials()

service = build("gmail", "v1", credentials=creds)

message = (

service.users()

.messages()

.get(userId="me", id="1989d239d2c9ea33")

.execute()

)

message

# {'id': '1989d239d2c9ea33', 'threadId': '1989d239d2c9ea33', 'labelIds': ['UNREAD', 'SENT', 'INBOX'], 'snippet': 'foo', 'payload': {'partId': '', 'mimeType': 'text/plain', 'filename': '', 'headers': [{'name': 'Received', 'value': 'from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700'}, {'name': 'Received', 'value': 'from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700'}, {'name': 'From', 'value': 'aldon.tony@gmail.com'}, {'name': 'To', 'value': 'aldon.tony@gmail.com'}, {'name': 'Subject', 'value': 'Automation Test'}, {'name': 'Content-Type', 'value': 'text/plain; charset="utf-8"'}, {'name': 'Content-Transfer-Encoding', 'value': '7bit'}, {'name': 'MIME-Version', 'value': '1.0'}, {'name': 'Date', 'value': 'Tue, 12 Aug 2025 00:16:53 -0700'}, {'name': 'Message-Id', 'value': ''}], 'body': {'size': 4, 'data': 'Zm9vCg=='}}, 'sizeEstimate': 544, 'historyId': '5510829', 'internalDate': '1754983013000'}

```

Here's the JSON, pretty-printed for readability:

```json

{

"id": "1989d239d2c9ea33",

"threadId": "1989d239d2c9ea33",

"labelIds": [

"UNREAD",

"SENT",

"INBOX"

],

"snippet": "foo",

"payload": {

"partId": "",

"mimeType": "text/plain",

"filename": "",

"headers": [

{

"name": "Received",

"value": "from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700"

},

{

"name": "Received",

"value": "from 636107077520 named unknown by gmailapi.google.com with HTTPREST; Tue, 12 Aug 2025 00:16:53 -0700"

},

{

"name": "From",

"value": "aldon.tony@gmail.com"

},

{

"name": "To",

"value": "aldon.tony@gmail.com"

},

{

"name": "Subject",

"value": "Automation Test"

},

{

"name": "Content-Type",

"value": "text/plain; charset=\"utf-8\""

},

{

"name": "Content-Transfer-Encoding",

"value": "7bit"

},

{

"name": "MIME-Version",

"value": "1.0"

},

{

"name": "Date",

"value": "Tue, 12 Aug 2025 00:16:53 -0700"

},

{

"name": "Message-Id",

"value": ""

}

],

"body": {

"size": 4,

"data": "Zm9vCg=="

}

},

"sizeEstimate": 544,

"historyId": "5510829",

"internalDate": "1754983013000"

}

```

#### Missing features & advantages in Zapier (according to GPT-4.1)

While I was writing the `sheets2gmail_polling.py` script, **I started noticing** what was missing—and **how much extra work** would be needed—to reach **the level of professional automation that Zapier offers**.

Naturally, I got curious, so when I finished the script, I asked `gpt-4.1` the following:

> What is missing in the Python script compared to a Zap in Zapier.com that makes Zapier preferable?

This was the absolutely expected answer I received:

- **Instant Triggers:** Zapier can use Google Drive's push notifications for near real-time updates, whereas your script is limited to polling on an interval (e.g., every 60 seconds or longer), which may not catch changes as quickly.

- **Error Handling and Notification:** Zapier provides user-friendly error messages, built-in alerts, and automatic retries. If a step fails (e.g., missing required email address), it explains the error and notifies you immediately.

- **Monitoring, Logging, and Troubleshooting:** Detailed run history, centralized logs, and a troubleshooting UI are built-in. You can see what happened in each Zap run, including data passed between steps and the exact reason for failure. Your script just logs messages to a file/console.

- **No-Code Editing:** The workflow can be edited, tested, and expanded with a few clicks—no need to edit code or redeploy.

- **Built-in Authentication, Token Management, and Security:** Zapier manages OAuth tokens and keeps them secure, handling refresh and expiration automatically.

- **Scalability and Reliability:** Zapier is a managed platform—no worries about your server going down, system restarts, or losing state during updates.

- **UI for Workflow Management:** Easy to enable/disable Zaps, manage multiple automations, see a dashboard, get system updates, and more—all through a graphical interface.

That's all I have for today! Talk soon 👋

#### References

- Google Cloud Console

-

- google-api-python-client

-

-

-

-

-

-

- Google Sheets API

-

-

-

-

- Gmail API

-

-

-

-

-

- Google Drive API

-

### How I explored Google Sheets to Gmail automation through Zapier before building it in Python (part 1)

Source: https://tonyaldon.com/2025-08-07-how-i-explored-google-sheets-to-gmail-automation-through-zapier-before-building-it-in-python-part-1/

tl;dr: I built my first Zap to send Gmail when Google Sheets updates. I tried intentionally triggering an error with missing fields. Exploring Zapier's logs and troubleshooting was actually fun!

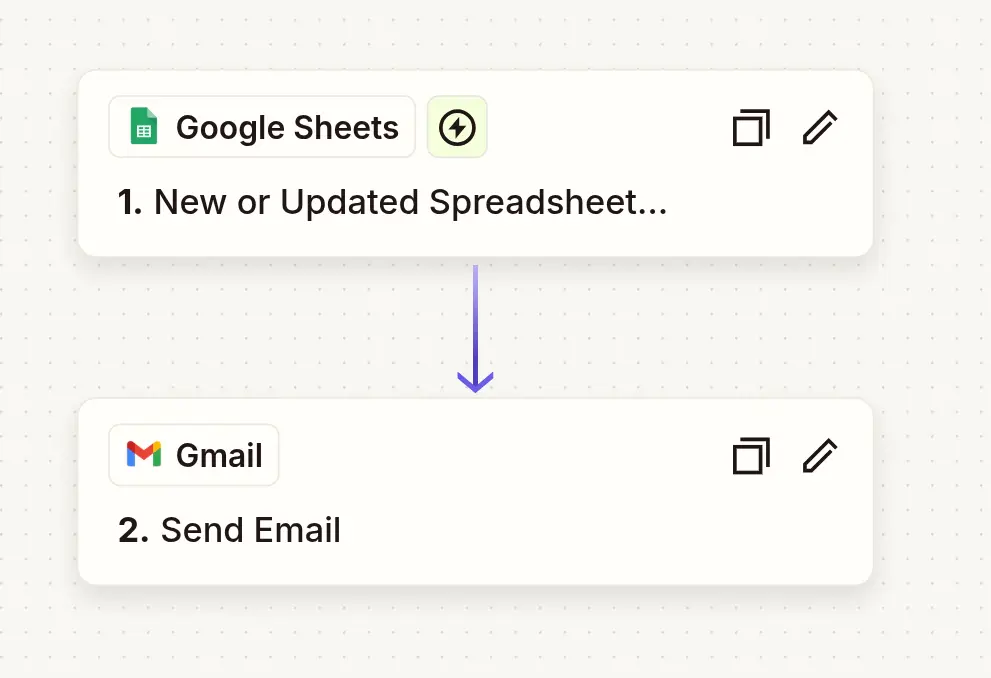

Yesterday, I built and published my first [Zapier](https://zapier.com) Zap with the Zap editor.

**The automation: Send emails via Gmail when Google Sheets rows are updated.**

More precisely, for the "zapier test 1" Google spreadsheet, **whenever a row is added or updated** in the "Sheet1" sheet, **send an email** to the `email` field. The content of the email is taken from the `note` field.

The user experience was smooth and quick.

Remember, a **Zap** is an **automation** made up of one **trigger** and one **action**. In other words: **When this happens, do this.**

Here are the steps I took:

1. I selected the **Gmail** app on page.

2. On the [Gmail integrations](https://zapier.com/apps/gmail/integrations) page, I picked the **Google Sheets** app.

3. I requested more details about the automation: "Send emails via Gmail when Google Sheets rows are updated."

4. On the [description page](https://zapier.com/apps/gmail/integrations/google-sheets/144/send-emails-via-gmail-when-google-sheets-rows-are-updated), I clicked "Try this template." That took me to the [Zap editor](https://zapier.com/editor) with the Google Sheets trigger and Gmail action already selected.

5. I **authorized** **Zapier** to **access** my Google Sheets, Google Drive and Gmail accounts.

6. I selected the "Sheet1" sheet in the "zapier test 1" spreadsheet and specified how to use the data to compose the email.

7. I **ran the tests** for the trigger and the action. Both worked.

8. Then **I published** the Zap.

**Now comes an interesting part.**

I wanted to see how **this Zap behaves** and **how Zapier handles errors**. How can I access the logs? Do they have logs? Are they insightful?

All of this matters because, when everything works, we live in a happy world. **But when systems break, how can we troubleshoot?** This is important, and it's better to think about it before a problem happens rather than after.

This is also what sets apart a product you can rely on from the rest.

**So, here's what I did.**

(Subscribe for free)[https://tonyaldon.substack.com/embed]

I added a row with the `name` as "bar" and left the other two fields empty, just to see what would happen:

- Nothing, if the trigger requires a complete row or

- An error, because the `email` and `note` fields are required.

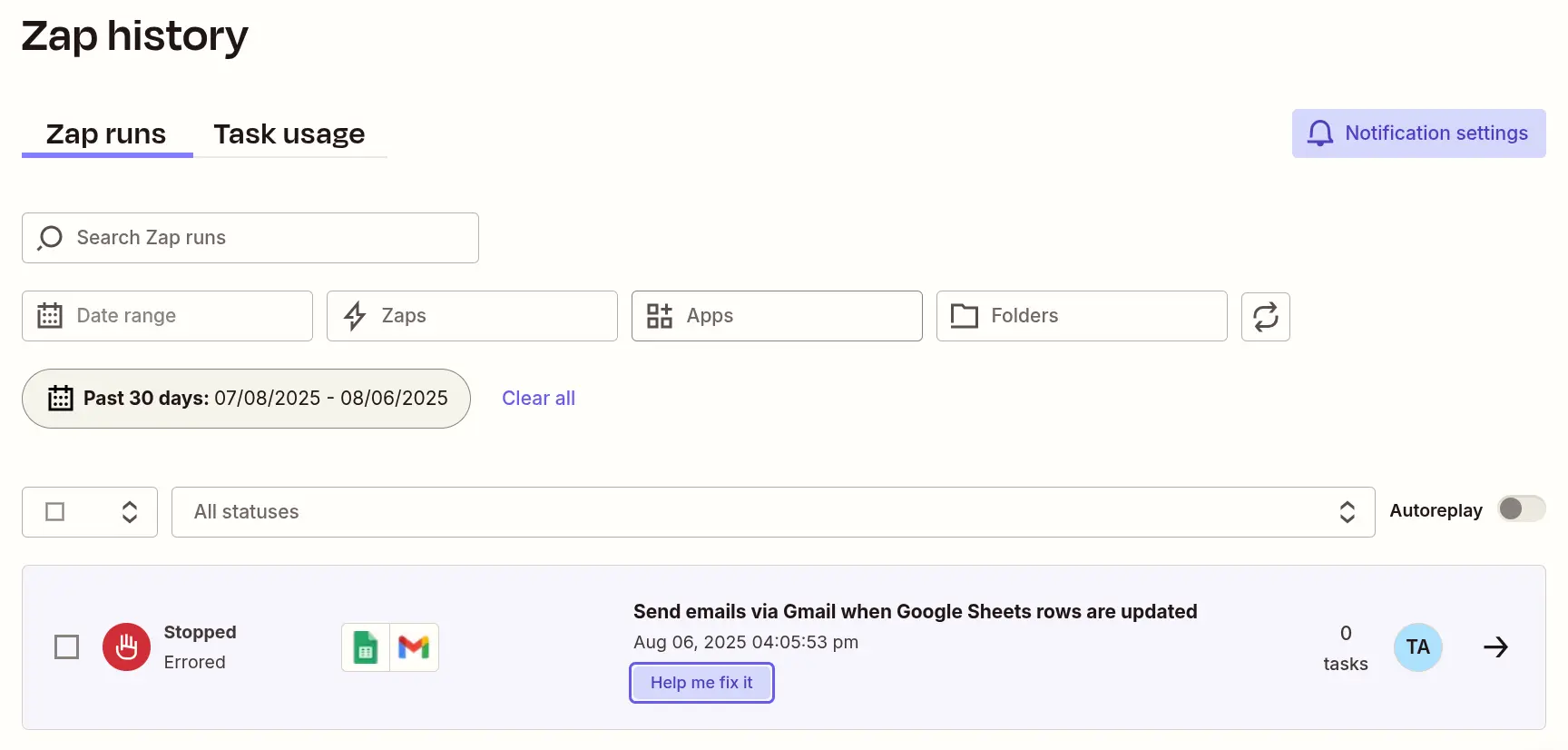

**An error did happen, and I got notified by email**: the `email` and `note` fields are required. I could also see the error logged in [Zap history](https://zapier.com/app/history):

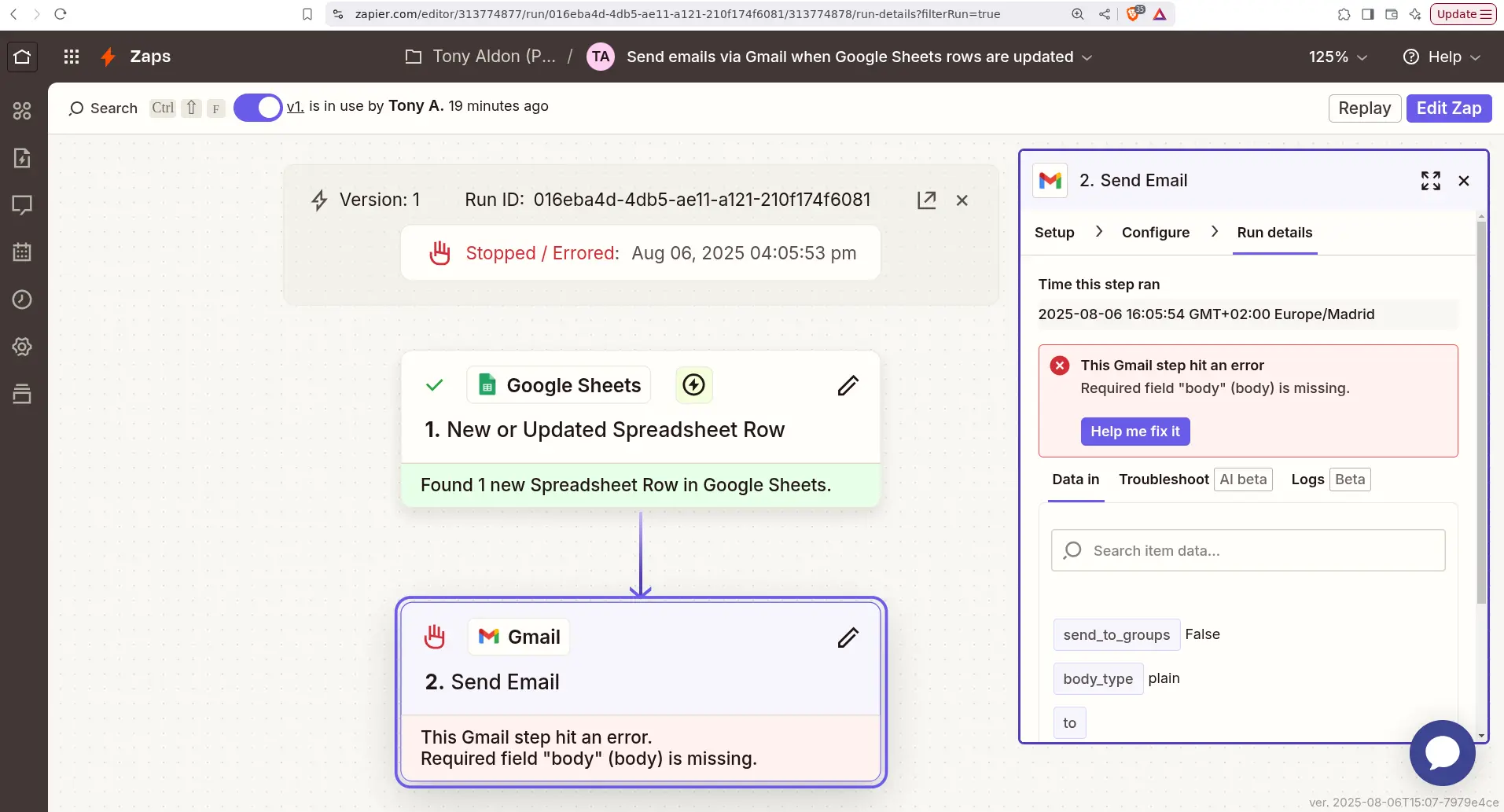

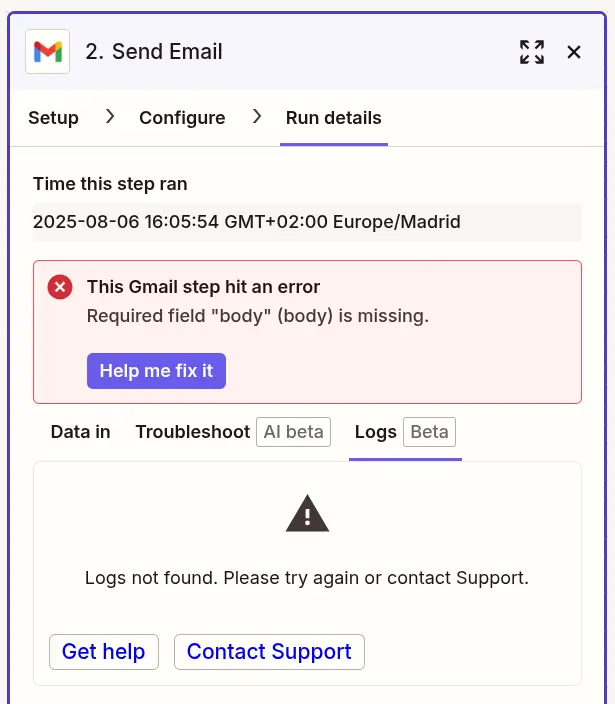

Something cool is that **I could troubleshoot the error** by looking at the corresponding **Zap run** (`016eba4d-4db5-ae11-a121-210f174f6081`) at the **Zap editor**:

```text

This Gmail step hit an error

Required field "body" (body) is missing.

```

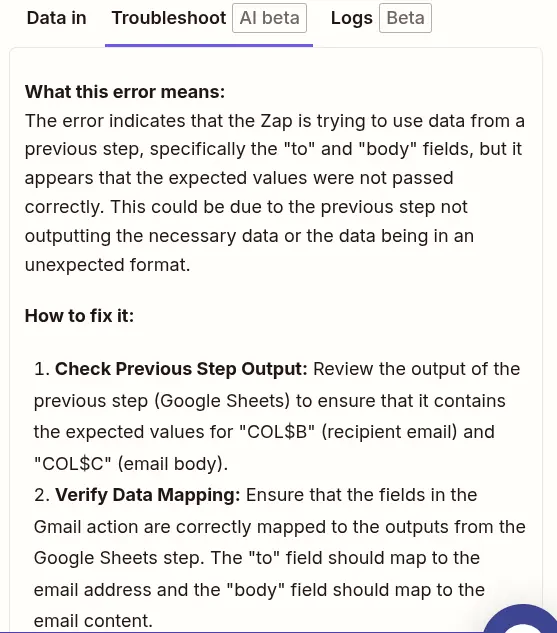

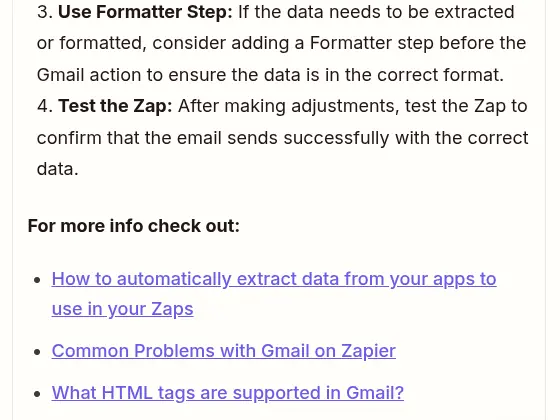

In the Zap editor, I clicked on the "Troubleshoot" tab to get AI-generated details about my error:

> **What this error means:**

>

> The error indicates that the Zap is trying to use data from a previous step, specifically the "to" and "body" fields, but it appears that the expected values were not passed correctly. This could be due to the previous step not outputting the necessary data or the data being in an unexpected format.

>

> **How to fix it:**

>

> 1. Check Previous Step Output: Review the output of the previous step (Google Sheets) to ensure that it contains the expected values for "COL$B" (recipient email) and "COL$C" (email body).

> 2. Verify Data Mapping: Ensure that the fields in the Gmail action are correctly mapped to the outputs from the Google Sheets step. The "to" field should map to the email address and the "body" field should map to the email content.

> 3. Use Formatter Step: If the data needs to be extracted or formatted, consider adding a Formatter step before the Gmail action to ensure the data is in the correct format.

> 4. Test the Zap: After making adjustments, test the Zap to confirm that the email sends successfully with the correct data.

>

> **For more info check out:**

>